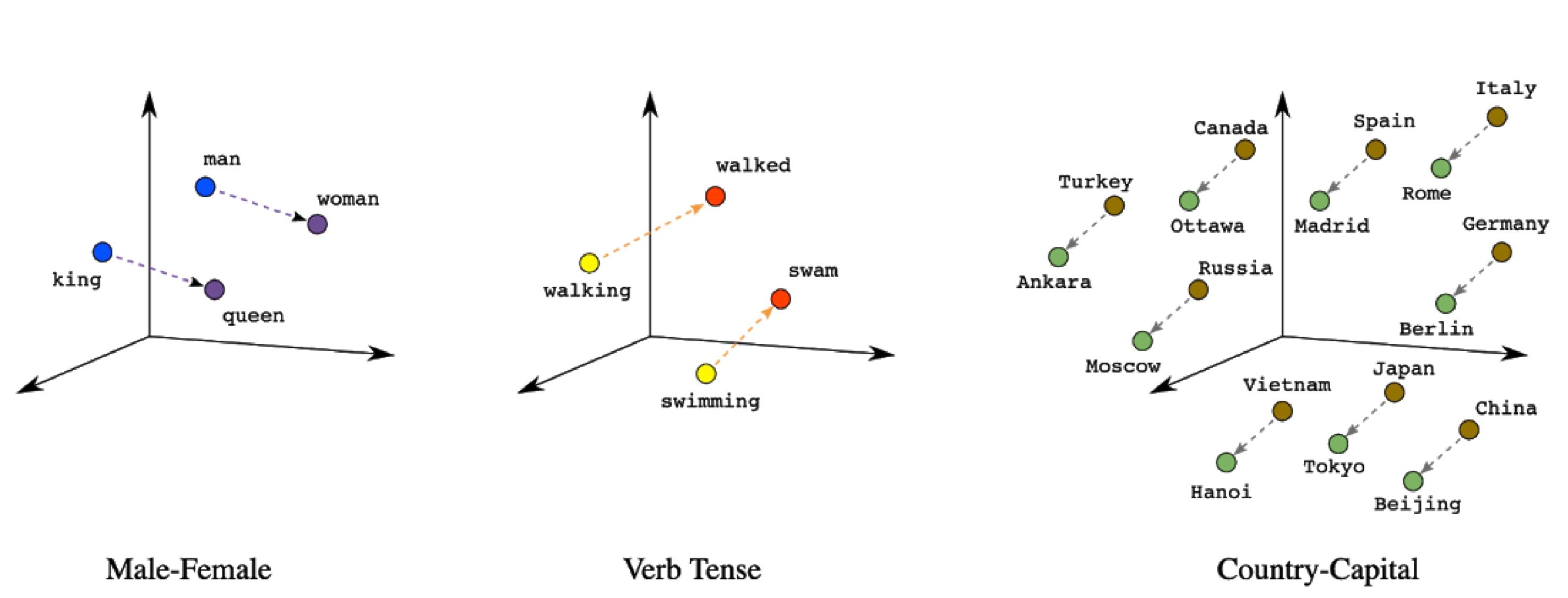

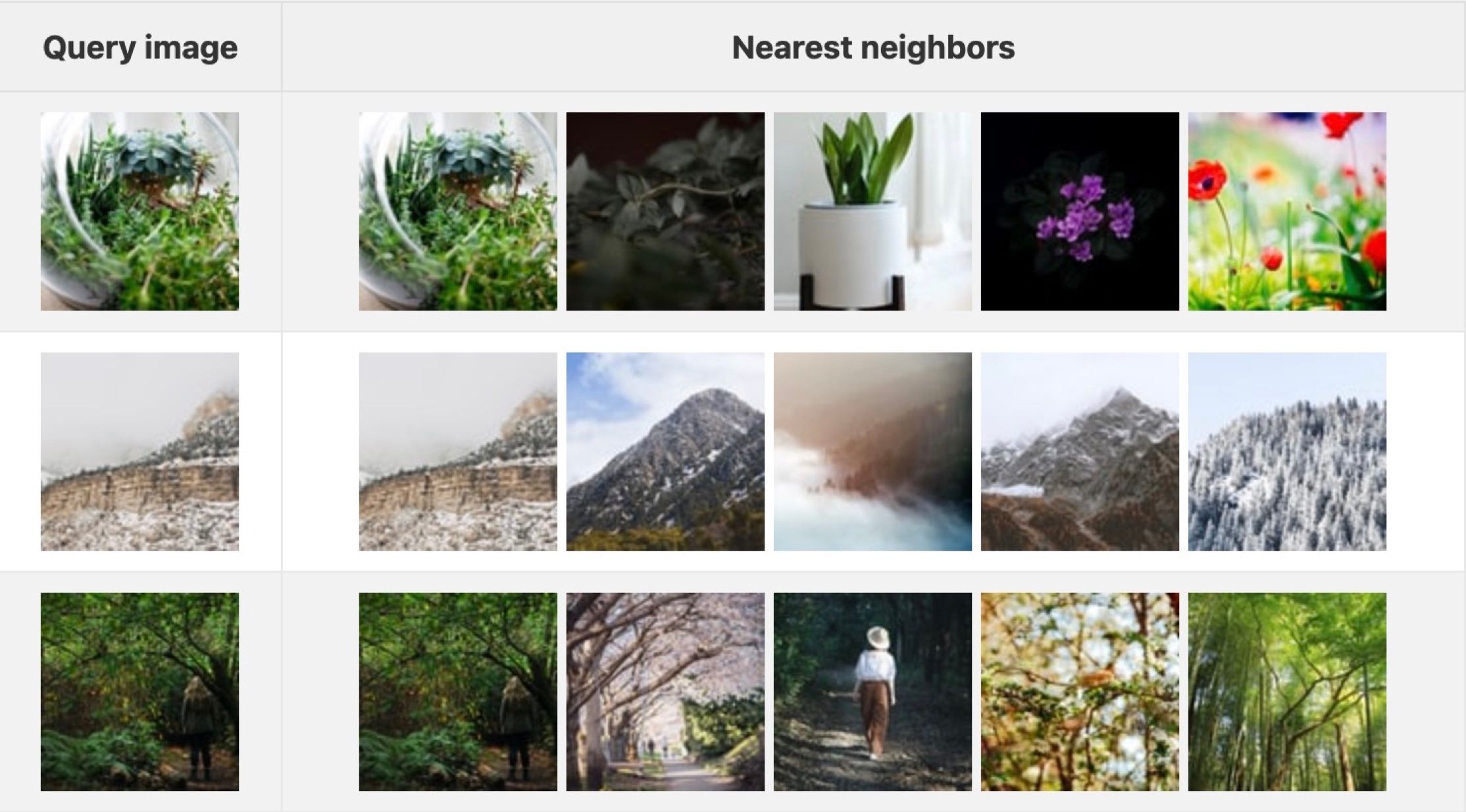

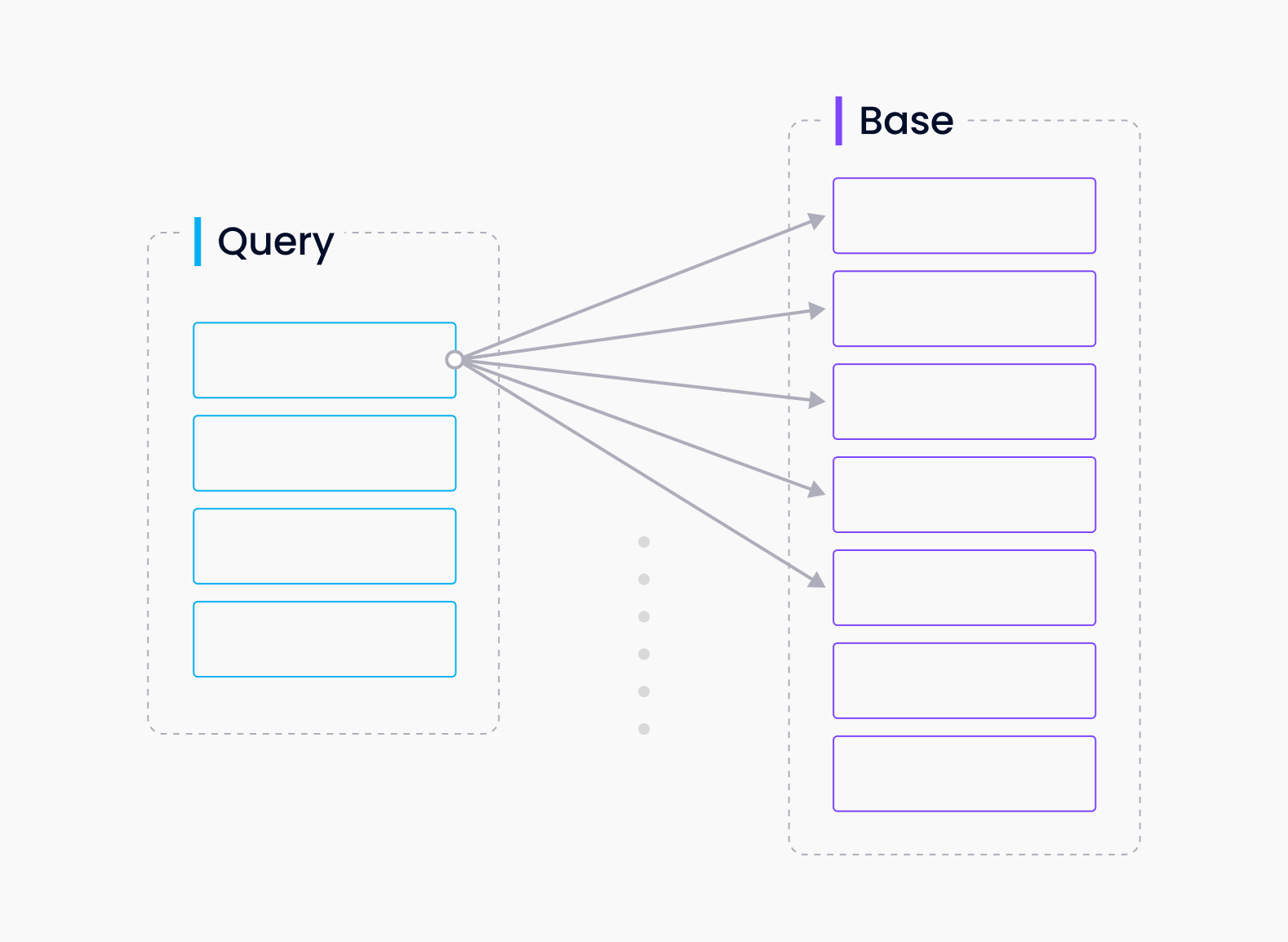

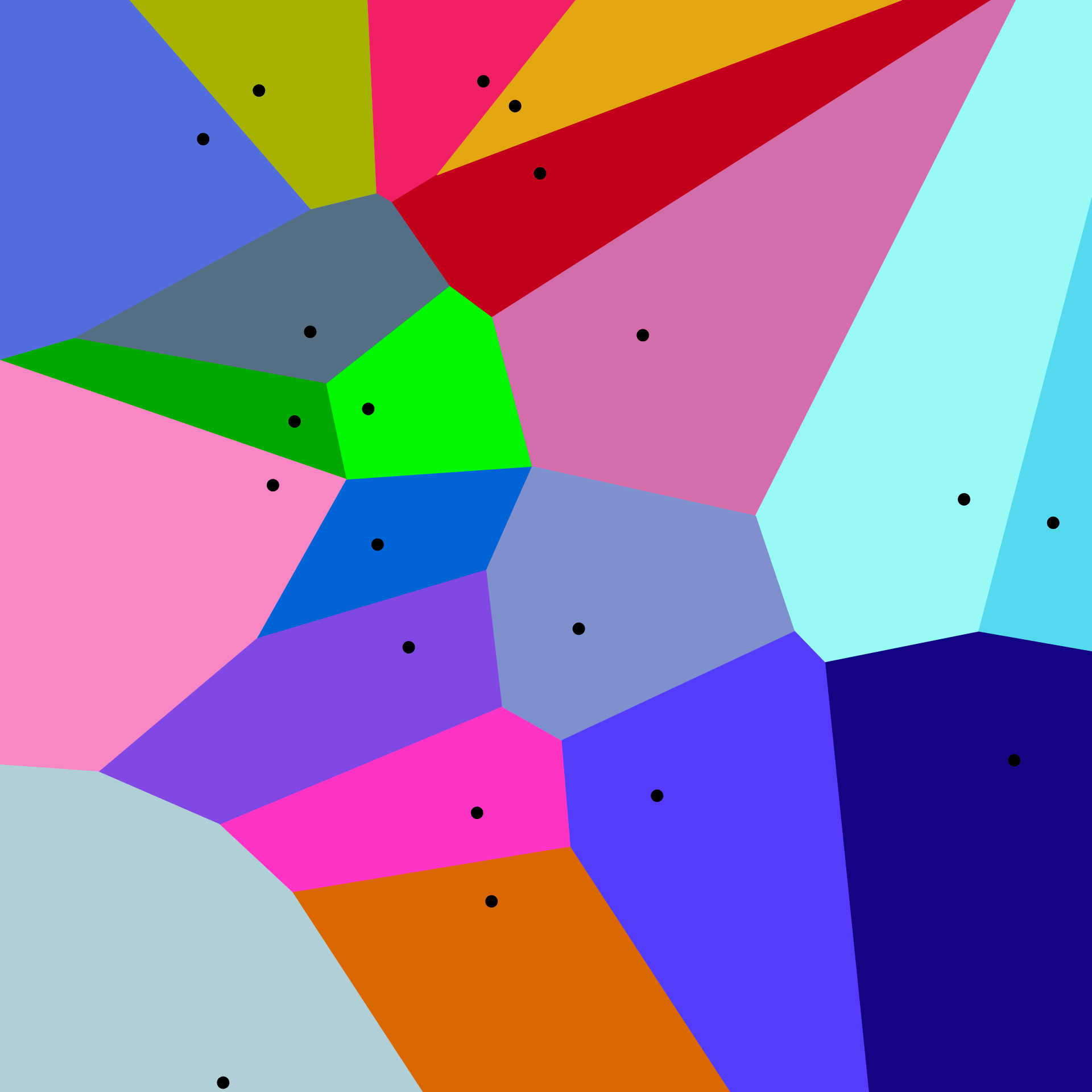

</noscript><div id="__next"><main><div class="detailHead_headContainer__aCYlj"><div class="page_container__IibMw"><div class="detailHead_contentContainer__aimWx"><div class="detailHead_leftSection__PKLAW"><h1 class="detailHead_title__D0Z91">An Introduction to Vector Embeddings: What They Are and How to Use Them</h1><div class="detailHead_timeWrapper__NFKKE"><span>Jun 03, 2024</span><span class="detailHead_spot__pMBk4"></span><span>9 min read</span></div><p class="detailHead_desc__leSOl">In this blog post, we explain the concept of vector embeddings and explore their applications, best practices, and tools for working with them.
</p><p class="detailHead_authorName__w4D3w">By <a href="/authors/_Haziqa_Sajid"> Haziqa Sajid</a></p></div></div></div></div><div class="page_container__IibMw"><div class="learnDetail_contentContainer__xRjX3"><div class="learnDetail_docContent__s44U3"><div class="docContainer blogContainer"><p><em>Understand vector embeddings and when and how to use them. Explore real-world applications with Milvus and Zilliz Cloud vector databases.</em></p> <p><a href="https://zilliz.com/glossary/vector-embeddings">Vector embeddings</a> are numerical representations of data points, making unstructured data easier to search against. These embeddings are stored in specialized databases like<a href="https://milvus.io/intro"> Milvus</a> and<a href="https://zilliz.com/cloud"> Zilliz Cloud</a> (fully managed Milvus), which utilize advanced algorithms and indexing techniques for quick data retrieval.</p> <p>Modern artificial intelligence (AI) models, like <a href="https://zilliz.com/glossary/large-language-models-(llms)">Large Language Models</a> (LLMs), use text vector embeddings to understand natural language and generate relevant responses. 
Moreover, LLM applications often use <a href="https://zilliz.com/learn/Retrieval-Augmented-Generation">Retrieval Augmented Generation (RAG)</a> to retrieve information from external vector stores for task-specific use cases.</p> <p>In this blog post, we explain the concept of vector embeddings and explore their applications, best practices, and tools for working with them.</p> <h2 id="What-are-Vector-Embeddings" class="common-anchor-header">What are Vector Embeddings?</h2><p>A vector embedding is a list of numbers, with each number representing a feature of the data. These embeddings are learned by analyzing relationships within a dataset, and data points whose embeddings lie closer to each other in the vector space are treated as semantically similar.</p> <p>The embeddings are generated by deep learning models trained to map data to a high-dimensional vector space. 
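</p> <p>Whether two embeddings are “close” can be measured with a similarity metric. The minimal Python sketch below uses cosine similarity, one common choice (Euclidean distance and inner product are others), where a score near 1 means two vectors point in nearly the same direction. The 4-dimensional vectors are hypothetical, hand-picked values for illustration rather than output from a real embedding model, which usually produces many more dimensions (the GloVe model used later in this post has 50).</p>

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 4-dimensional embeddings, hand-picked for illustration only.
embeddings = {
    "ice": [0.9, 0.8, 0.1, 0.0],
    "cold": [0.8, 0.9, 0.2, 0.1],
    "tree": [0.1, 0.0, 0.9, 0.8],
}

# Rank every other word by its similarity to "ice", most similar first.
query = embeddings["ice"]
ranked = sorted(
    ((word, cosine_similarity(query, vector))
     for word, vector in embeddings.items() if word != "ice"),
    key=lambda pair: pair[1],
    reverse=True,
)

for word, score in ranked:
    print(f"{word}: {score:.3f}")
```

<p>With these values, “cold” scores about 0.99 and “tree” about 0.12, so “cold” is the nearest neighbor of “ice”. A vector database performs the same kind of ranking at scale, using an index rather than comparing the query with every stored vector.</p> <p>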
Popular embedding models like BERT and Data2Vec form the basis of many modern deep-learning applications.</p> <p>Vector embeddings are also widely used in <a href="https://zilliz.com/learn/A-Beginner-Guide-to-Natural-Language-Processing">NLP</a> and computer vision (CV) applications because they represent complex inputs as compact numerical vectors that are efficient to compare.</p> <h2 id="Types-of-Vector-Embeddings" class="common-anchor-header">Types of Vector Embeddings</h2><p>There are three main types of embeddings, distinguished by how their values are stored: <a href="https://zilliz.com/learn/sparse-and-dense-embeddings">dense, sparse,</a> and binary embeddings. Here’s how they differ in characteristics and use:</p> <h3 id="1-Dense-Embeddings" class="common-anchor-header">1. Dense Embeddings</h3><p>Dense embeddings are vectors in which most elements are non-zero. 
They capture fine-grained detail because every dimension holds a value, but storing every element, including any zeros, makes them less storage-efficient than sparse embeddings.</p> <p>Word2Vec, GloVe, CLIP, and BERT are models that generate dense vector embeddings from input data.</p> <h3 id="2-Sparse-Embeddings" class="common-anchor-header">2. Sparse Embeddings</h3><p>Sparse vector embeddings are high-dimensional vectors in which most elements are zero. The non-zero values typically represent the relative importance of terms (such as words) in a document relative to the rest of the corpus. Because only the non-zero entries need to be stored, sparse embeddings require less memory and storage, and they suit high-dimensional sparse data such as word frequencies.</p> <p>TF-IDF and SPLADE are popular methods of generating sparse vector embeddings.</p> <h3 id="3-Binary-Embeddings" class="common-anchor-header">3. 
Binary Embeddings</h3><p>A binary embedding stores each dimension as a single bit, either 1 or 0. This is 32 times more compact than storing each dimension as a 32-bit floating-point number, and it speeds up retrieval because distances between binary vectors (such as Hamming distance) can be computed with fast bitwise operations. However, it does lead to information loss, since precision is reduced.</p> <p>Even so, binary embeddings are popular in use cases where speed and memory savings matter more than a slight loss in accuracy.</p> <h2 id="How-are-Vector-Embeddings-Created" class="common-anchor-header">How are Vector Embeddings Created?</h2><p>Vector embeddings are created with deep learning models and statistical methods. These methods identify patterns and relationships in the input data to learn how data points differ from one another. 
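</p> <p>To illustrate a statistical (non-neural) method, the minimal sketch below builds TF-IDF vectors, one of the sparse-embedding methods mentioned earlier, for a hypothetical three-sentence corpus in plain Python. It uses the basic weighting tf × log(N / df), where N is the number of documents and df is the number of documents containing a term; libraries such as scikit-learn apply smoothed variants, so their exact values differ.</p>

```python
import math
from collections import Counter

# Hypothetical toy corpus: each document becomes one sparse vector.
corpus = [
    "ice is frozen water",
    "cold water is wet",
    "a tree is green",
]

tokenized = [doc.split() for doc in corpus]
vocabulary = sorted({term for doc in tokenized for term in doc})
num_docs = len(tokenized)

# Document frequency: the number of documents that contain each term.
doc_freq = Counter(term for doc in tokenized for term in set(doc))


def tf_idf_vector(tokens):
    """Return a sparse {term: weight} dict that keeps only non-zero weights."""
    counts = Counter(tokens)
    vector = {}
    for term, count in counts.items():
        tf = count / len(tokens)                   # term frequency within this document
        idf = math.log(num_docs / doc_freq[term])  # 0.0 for terms found in every document
        weight = tf * idf
        if weight != 0.0:
            vector[term] = weight
    return vector


sparse_vectors = [tf_idf_vector(doc) for doc in tokenized]

for doc, vector in zip(corpus, sparse_vectors):
    print(f"{doc!r}: {len(vector)} of {len(vocabulary)} dimensions non-zero")
    print("   ", {term: round(weight, 3) for term, weight in vector.items()})
```

<p>Each vector has non-zero weights only for its own terms, which is what makes it sparse. The word “is” appears in every document, so its weight is log(3/3) = 0 and it is dropped, while a rarer word such as “ice” receives a higher weight than the shared word “water”.</p> <p>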
Models generate vector embeddings in an n-dimensional space based on their understanding of these underlying relationships.</p> <p>An n-dimensional space goes beyond the three dimensions we can visualize, so each dimension can capture a different aspect of the data. Higher-dimensional embeddings can capture finer details from data points, which generally leads to more accurate outputs.</p> <p>For example, in textual data, a high-dimensional space can capture subtle differences in word meaning. In a 2-dimensional space, the words “tired” and “exhausted” would likely be grouped together as near-identical. With more dimensions, a model can keep them close while separating them along dimensions that reflect their difference in intensity and emotion. Mathematically, a vector <code>v</code> in n-dimensional space is written as:</p> <p>v = [v<sub>1</sub>, v<sub>2</sub>, …, v<sub>n</sub>]</p> <p>Two popular techniques for creating vector embeddings are:</p> <h3 id="Neural-Networks" class="common-anchor-header">Neural Networks</h3><p>Neural networks, such as <a href="https://zilliz.com/glossary/convolutional-neural-network">Convolutional Neural Networks</a> (CNNs), <a href="https://zilliz.com/glossary/recurrent-neural-networks">Recurrent Neural Networks</a> (RNNs), and Transformers, excel at learning complex patterns in data. 
For example, BERT, a Transformer-based model, analyzes the words on both sides of a term to understand its meaning in context and generate embeddings.</p> <h3 id="Matrix-Factorization" class="common-anchor-header">Matrix Factorization</h3><p>Matrix factorization is a simpler embedding technique than neural networks. It takes training data as a matrix whose rows and columns represent two kinds of entities, and factorizes it into two lower-rank matrices whose rows serve as embeddings. Matrix factorization is widely used in recommendation systems, where the input is a user-item rating matrix with rows representing users and columns representing items (e.g., movies). Multiplying the user embedding matrix by the transpose of the item embedding matrix produces a matrix that approximates the original one (R ≈ UV<sup>T</sup>, where R is the rating matrix, U holds the user embeddings, and V holds the item embeddings), including estimated ratings for items a user has not rated yet.</p> <p>Various tools and libraries simplify generating embeddings from input data. The most popular include TensorFlow, PyTorch, and Hugging Face. 
These open-source libraries and tools offer user-friendly documentation for creating embedding models.</p> <p>The following table lists common embedding models and techniques, with a short description and a link for further reading:</p> <table> <thead> <tr><th style="text-align:center">Model</th><th style="text-align:center">Description</th><th style="text-align:center">Link</th></tr> </thead> <tbody> <tr><td style="text-align:center">Neural Networks</td><td style="text-align:center">Neural networks such as CNNs and RNNs are effective at identifying patterns in data, which makes them useful for generating vector embeddings. Word2Vec, for example, uses a shallow neural network to learn word embeddings.</td><td style="text-align:center"><a href="https://developers.google.com/machine-learning/crash-course/introduction-to-neural-networks/video-lecture">https://developers.google.com/machine-learning/crash-course/introduction-to-neural-networks/video-lecture</a></td></tr> <tr><td style="text-align:center">Matrix Factorization</td><td style="text-align:center">Matrix factorization suits collaborative filtering tasks such as recommendation systems. It captures user preferences by decomposing the user-item interaction matrix into lower-dimensional user and item embeddings.</td><td style="text-align:center"><a href="https://developers.google.com/machine-learning/recommendation/collaborative/matrix">https://developers.google.com/machine-learning/recommendation/collaborative/matrix</a></td></tr> <tr><td style="text-align:center">GloVe</td><td style="text-align:center">GloVe (Global Vectors) learns word embeddings from global word co-occurrence statistics. 
It produces a single, context-independent embedding for each word.</td><td style="text-align:center"><a href="https://nlp.stanford.edu/projects/glove/">https://nlp.stanford.edu/projects/glove/</a></td></tr> <tr><td style="text-align:center">BERT</td><td style="text-align:center">BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained model that reads text bidirectionally, using the words on both sides of each token to build context-aware embeddings.</td><td style="text-align:center"><a href="https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BERT-The-Foundation-Model-for-BGE-M3-and-Splade">https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BERT-The-Foundation-Model-for-BGE-M3-and-Splade</a></td></tr> <tr><td style="text-align:center">ColBERT</td><td style="text-align:center">A token-level embedding and ranking model that represents text as one vector per token.</td><td style="text-align:center"><a href="https://zilliz.com/learn/explore-colbert-token-level-embedding-and-ranking-model-for-similarity-search">https://zilliz.com/learn/explore-colbert-token-level-embedding-and-ranking-model-for-similarity-search</a></td></tr> <tr><td style="text-align:center">SPLADE</td><td style="text-align:center">An advanced model that generates sparse embeddings with learned term weights.</td><td style="text-align:center"><a href="https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#SPLADE">https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#SPLADE</a></td></tr> <tr><td style="text-align:center">BGE-M3</td><td style="text-align:center"><a href="https://huggingface.co/BAAI/bge-m3">BGE-M3</a> is an advanced model that builds on a BERT-style encoder and can generate dense, sparse, and multi-vector embeddings with a single model.</td><td style="text-align:center"><a href="https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BGE-M3">https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BGE-M3</a></td></tr> </tbody> </table> <h2 id="What-are-Vector-Embeddings-Used-for" class="common-anchor-header">What are Vector Embeddings Used for?</h2><p>Vector embeddings are widely used in modern search and AI tasks, including:</p> <ul> <li><strong>Similarity Search:</strong> Similarity search finds the data points closest to a query in a high-dimensional space. It works by measuring the distance or similarity between vector embeddings with metrics such as Euclidean distance, cosine similarity, or inner product. Modern search engines use similarity search to retrieve web pages relevant to a user’s query.</li> <li><strong>Recommendation Systems:</strong> Recommendation systems rely on vectorized data to group similar items; items from the same group can then be recommended to users. These systems build groups at several levels, such as users grouped by demographics and preferences, and groups of products. All of this information is stored as vector embeddings for efficient and accurate retrieval at runtime.</li> <li><strong>Retrieval Augmented Generation (RAG)</strong>: RAG is a popular technique for reducing hallucinations in large language models and giving them access to additional knowledge. Embedding models transform external knowledge and user queries into vector embeddings. A vector database stores the embeddings and runs a similarity search to find the results most relevant to the user query. 
The LLM then generates the final answer based on the retrieved context.</li> </ul> <h2 id="Storing-Indexing-and-Retrieving-Vector-Embeddings-with-Milvus" class="common-anchor-header">Storing, Indexing, and Retrieving Vector Embeddings with Milvus</h2><p>Milvus is a vector database built to store, index, and search vector embeddings. Here’s a step-by-step approach using <code>PyMilvus</code>, its Python SDK:</p> <h3 id="1-Install-Libraries-and-Set-up-a-Milvus-Database" class="common-anchor-header">1. Install Libraries and Set up a Milvus Database</h3><p>Install <code>pymilvus</code> and <code>gensim</code>: <code>pymilvus</code> is the Python SDK for Milvus, and <code>gensim</code> is a Python library for NLP that we use to load a pre-trained word embedding model. 
Run the following code to install the libraries (the leading <code>!</code> runs the command from a Jupyter notebook; omit it in a terminal):</p> <pre><code>!pip install -U pymilvus gensim
</code></pre> <p>In this tutorial, we connect to <a href="https://milvus.io/docs/install_standalone-docker.md">Milvus</a> running in Docker, so make sure Docker is installed on your system. Run the following commands in your terminal to download the installation script and start Milvus:</p> <pre><code>$ curl -sfL https://raw.githubusercontent.com/milvus-io/milvus/master/scripts/standalone_embed.sh -o standalone_embed.sh
$ bash standalone_embed.sh start
</code></pre> <p>The Milvus service is now running and, by default, listens on port 19530. Create a <code>MilvusClient</code> instance that connects to it. (Alternatively, for a quick local test without Docker, you can pass a filename such as <code>milvus_demo.db</code> instead of a URI; Milvus Lite then stores all data in that local file.)</p> <pre><code>from pymilvus import MilvusClient

# Connect to the Milvus standalone server started above
client = MilvusClient(uri="http://localhost:19530")
</code></pre> <h3 id="2-Generate-Vector-Embeddings" class="common-anchor-header">2. 
Generate Vector Embeddings<button data-href="#2-Generate-Vector-Embeddings" class="anchor-icon" translate="no"> <svg aria-hidden="true" focusable="false" height="20" version="1.1" viewBox="0 0 16 16" width="16" > <path fill="#0092E4" fill-rule="evenodd" d="M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z" ></path> </svg> </button></h3><p>The following code creates a collection to store embeddings, loads a pre-trained model from <code>gensim</code> , and generates embeddings to simple words like ice and water:</p> <pre><code>import gensim.downloader as api <span class="hljs-keyword">from</span> pymilvus import ( connections, FieldSchema, CollectionSchema, DataType) <span class="hljs-comment"># create a collection</span> fields = [ FieldSchema(<span class="hljs-attribute">name</span>=<span class="hljs-string">&quot;pk&quot;</span>, <span class="hljs-attribute">dtype</span>=DataType.INT64, <span class="hljs-attribute">is_primary</span>=<span class="hljs-literal">True</span>, <span class="hljs-attribute">auto_id</span>=<span class="hljs-literal">False</span>), FieldSchema(<span class="hljs-attribute">name</span>=<span class="hljs-string">&quot;words&quot;</span>, <span class="hljs-attribute">dtype</span>=DataType.VARCHAR, <span class="hljs-attribute">max_length</span>=50), FieldSchema(<span class="hljs-attribute">name</span>=<span class="hljs-string">&quot;embeddings&quot;</span>, <span class="hljs-attribute">dtype</span>=DataType.FLOAT_VECTOR, <span class="hljs-attribute">dim</span>=50)] schema = CollectionSchema(fields, <span class="hljs-string">&quot;Demo to store and retrieve embeddings&quot;</span>) demo_milvus = client.create_collection(<span 
class="hljs-string">&quot;milvus_demo&quot;</span>, schema) <span class="hljs-comment"># load the pre-trained model from gensim</span> model = api.load(<span class="hljs-string">&quot;glove-wiki-gigaword-50&quot;</span>) <span class="hljs-comment"># generate embeddings</span> ice = model[<span class="hljs-string">&#x27;ice&#x27;</span>] water = model[<span class="hljs-string">&#x27;water&#x27;</span>] cold = model[<span class="hljs-string">&#x27;cold&#x27;</span>] tree = model[<span class="hljs-string">&#x27;tree&#x27;</span>] man = model[<span class="hljs-string">&#x27;man&#x27;</span>] woman = model[<span class="hljs-string">&#x27;woman&#x27;</span>] child = model[<span class="hljs-string">&#x27;child&#x27;</span>] female = model[<span class="hljs-string">&#x27;female&#x27;</span>] <button class="copy-code-btn"></button></code></pre> <h3 id="3-Store-Vector-Embeddings" class="common-anchor-header">3. Store Vector Embeddings<button data-href="#3-Store-Vector-Embeddings" class="anchor-icon" translate="no"> <svg aria-hidden="true" focusable="false" height="20" version="1.1" viewBox="0 0 16 16" width="16" > <path fill="#0092E4" fill-rule="evenodd" d="M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z" ></path> </svg> </button></h3><p>Store the generated vector embeddings in the previous step to the <code>demo_milvus</code> collection we created above:</p> <pre><code><span class="hljs-comment">#Insert data in collection</span> <span class="hljs-attr">data</span> = [ [<span class="hljs-number">1</span>,<span class="hljs-number">2</span>,<span class="hljs-number">3</span>,<span class="hljs-number">4</span>,<span class="hljs-number">5</span>,<span class="hljs-number">6</span>,<span 
class="hljs-number">7</span>,<span class="hljs-number">8</span>], <span class="hljs-comment"># field pk </span> [<span class="hljs-string">&#x27;ice&#x27;</span>,<span class="hljs-string">&#x27;water&#x27;</span>,<span class="hljs-string">&#x27;cold&#x27;</span>,<span class="hljs-string">&#x27;tree&#x27;</span>,<span class="hljs-string">&#x27;man&#x27;</span>,<span class="hljs-string">&#x27;woman&#x27;</span>,<span class="hljs-string">&#x27;child&#x27;</span>,<span class="hljs-string">&#x27;female&#x27;</span>], <span class="hljs-comment"># field words </span> [ice, water, cold, tree, man, woman, child, female], <span class="hljs-comment"># field embeddings]</span> insert_result = demo_milvus.insert(data) <span class="hljs-comment"># After final entity is inserted, it is best to call flush to have no growing segments left in memory</span> demo_milvus.flush() <button class="copy-code-btn"></button></code></pre> <h3 id="4-Create-Indexes-on-Entries" class="common-anchor-header">4. Create Indexes on Entries<button data-href="#4-Create-Indexes-on-Entries" class="anchor-icon" translate="no"> <svg aria-hidden="true" focusable="false" height="20" version="1.1" viewBox="0 0 16 16" width="16" > <path fill="#0092E4" fill-rule="evenodd" d="M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z" ></path> </svg> </button></h3><p>Indexes make the vector search faster. 
The following code <code>IVF_FLAT</code> index, <code>L2 (Euclidean distance)</code> metric, and 128 parameters to create an index:</p> <pre><code><span class="hljs-keyword">index</span> = { <span class="hljs-string">&quot;index_type&quot;</span>: <span class="hljs-string">&quot;IVF_FLAT&quot;</span>, <span class="hljs-string">&quot;metric_type&quot;</span>: <span class="hljs-string">&quot;L2&quot;</span>, <span class="hljs-string">&quot;params&quot;</span>: {<span class="hljs-string">&quot;nlist&quot;</span>: 128},} demo_milvus.create_<span class="hljs-meta">index</span>(<span class="hljs-string">&quot;embeddings&quot;</span>, <span class="hljs-keyword">index</span>) <button class="copy-code-btn"></button></code></pre> <h3 id="5-Search-Vector-Embeddings" class="common-anchor-header">5. Search Vector Embeddings<button data-href="#5-Search-Vector-Embeddings" class="anchor-icon" translate="no"> <svg aria-hidden="true" focusable="false" height="20" version="1.1" viewBox="0 0 16 16" width="16" > <path fill="#0092E4" fill-rule="evenodd" d="M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z" ></path> </svg> </button></h3><p>To search the vector embedding, load the Milvus collection in memory using the <code>.load()</code> method and do a vector similarity search:</p> <pre><code>demo_milvus.load() <span class="hljs-comment"># performs a vector similarity search:</span> data = [cold]search_params = { <span class="hljs-string">&quot;metric_type&quot;</span>: <span class="hljs-string">&quot;L2&quot;</span>, <span class="hljs-string">&quot;params&quot;</span>: {<span class="hljs-string">&quot;nprobe&quot;</span>: 10},} result = demo_milvus.search(data, <span 
class="hljs-string">&quot;embeddings&quot;</span>, search_params, limit=4, output_fields=[<span class="hljs-string">&quot;words&quot;</span>]) <button class="copy-code-btn"></button></code></pre> <h2 id="Best-Practices-for-Using-Vector-Embeddings" class="common-anchor-header">Best Practices for Using Vector Embeddings<button data-href="#Best-Practices-for-Using-Vector-Embeddings" class="anchor-icon" translate="no"> <svg aria-hidden="true" focusable="false" height="20" version="1.1" viewBox="0 0 16 16" width="16" > <path fill="#0092E4" fill-rule="evenodd" d="M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z" ></path> </svg> </button></h2><p>Obtaining optimal results with vector embeddings requires careful use of embedding models. The best practices for using vector embeddings are:</p> <h3 id="1-Selecting-the-Right-Embedding-Model" class="common-anchor-header">1. Selecting the Right Embedding Model<button data-href="#1-Selecting-the-Right-Embedding-Model" class="anchor-icon" translate="no"> <svg aria-hidden="true" focusable="false" height="20" version="1.1" viewBox="0 0 16 16" width="16" > <path fill="#0092E4" fill-rule="evenodd" d="M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z" ></path> </svg> </button></h3><p>Different embedding models are suitable for different tasks. For example, CLIP is designed for multimodal tasks, and GloVe is designed for NLP tasks. 
<a href="https://zilliz.com/blog/choosing-the-right-embedding-model-for-your-data">Selecting an embedding model</a> based on your data and computational constraints leads to better results.</p> <h3 id="2-Optimizing-Embedding-Performance" class="common-anchor-header">2. Optimizing Embedding Performance</h3><p>Pre-trained models like BERT and CLIP offer a good starting point, and fine-tuning them on domain-specific data can further improve their performance.</p> <p>Hyperparameter tuning also helps: searching over settings such as learning rate and batch size finds the combination that performs best. Data augmentation is another way to improve embedding model performance. It artificially increases the size and diversity of the training data, which makes it useful for tasks with limited data.</p> <h3 id="3-Monitoring-Embedding-Model" class="common-anchor-header">3. Monitoring Embedding Model</h3><p>Continuously monitoring embedding models tracks their performance over time. This reveals model degradation early, so you can fine-tune the model to keep results accurate.</p> <h3 id="4-Considering-Evolving-Needs" class="common-anchor-header">4. Considering Evolving Needs</h3><p>Evolving data, such as growing volumes or changing formats, can reduce accuracy. 
Retraining and fine-tuning models as your data evolves helps maintain accurate results.</p> <h2 id="Common-Pitfalls-and-How-to-Avoid-Them" class="common-anchor-header">Common Pitfalls and How to Avoid Them</h2><h4 id="Change-in-Model-Architecture" class="common-anchor-header">Change in Model Architecture</h4><p>Fine-tuning, hyperparameter tuning, and architectural changes all modify the model that generates the vector embeddings. Significant changes therefore produce different embeddings for the same input, which may no longer be comparable with embeddings generated earlier.</p> <p>To improve model performance without changing the model completely, avoid retraining all of its parameters from scratch. Instead, fine-tune pre-trained models like Word2Vec and BERT for specific tasks.</p> <h4 id="Data-Drift" class="common-anchor-header">Data Drift</h4><p>Data drift occurs when incoming data diverges from the data the model was trained on, which can make the resulting vector embeddings less accurate. Continuously monitoring your data helps ensure it stays consistent with what the model expects.</p> <h4 id="Misleading-Evaluation-Metrics" class="common-anchor-header">Misleading Evaluation Metrics</h4><p>Each evaluation metric suits particular tasks. Choosing metrics arbitrarily can produce misleading analysis that hides the model's true performance.</p> <p>Choose evaluation metrics that fit your task. 
For example, use cosine similarity to measure semantic similarity and BLEU score to evaluate translation tasks.</p> <h2 id="Further-Resources" class="common-anchor-header">Further Resources</h2><p>The best way to deepen your understanding of vector embeddings is to study relevant resources, practice, and engage with industry professionals. Below are some ways to explore vector embeddings in depth:</p> <ul> <li>Zilliz Learn series: <a href="https://zilliz.com/learn">https://zilliz.com/learn</a></li> <li>Zilliz Glossaries: <a href="https://zilliz.com/glossary">https://zilliz.com/glossary</a></li> <li>Embedding models and their integration with Milvus: <a href="https://zilliz.com/product/integrations">https://zilliz.com/product/integrations</a></li> <li>Hugging Face Models: <a href="https://huggingface.co/models">https://huggingface.co/models</a></li> <li>Academic papers: <ul> <li><a href="https://arxiv.org/abs/1810.04805">[1810.04805] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding</a></li> <li><a href="https://arxiv.org/abs/2004.12832">[2004.12832] ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT</a></li> <li><a href="https://arxiv.org/abs/2109.10086">[2109.10086] SPLADE v2: Sparse Lexical and Expansion Model for Information Retrieval</a></li> </ul></li> <li>Benchmark tool for evaluating vector databases: <a href="https://zilliz.com/vector-database-benchmark-tool?database=ZillizCloud%2CMilvus%2CElasticCloud%2CPgVector%2CPinecone%2CQdrantCloud%2CWeaviateCloud&amp;dataset=medium&amp;filter=none%2Clow%2Chigh&amp;tab=1">VectorDBBench: An Open-Source VectorDB Benchmark Tool</a></li> </ul> <h3 id="2-Community-Engagement" class="common-anchor-header">2. Community Engagement</h3><p>Join <a href="https://discord.com/invite/8uyFbECzPX">our Discord</a> community to connect with GenAI developers from various industries and discuss everything related to vector embeddings, vector databases, and AI. 
Follow relevant discussions on Stack Overflow, Reddit, and <a href="https://github.com/milvus-io/milvus">GitHub</a> to learn potential issues you might encounter when working with embeddings and improve your debugging skills.</p> <p>Staying up-to-date with resources and engaging with the community ensures that your skills grow as technology advances, which offers you a competitive advantage in the AI industry.</p> </div><div class="learnDetail_updateAt__h06TJ">Updated on Jul 01, 2025</div><ul class="learnDetail_authorsList__1H6GG"><li><span class="learnDetail_avatarWrapper__M_vVs"><img src="https://assets.zilliz.com/Haziqa_Sajid_e9a3a2f2ed.jpeg" alt=" Haziqa Sajid"/></span><div><a class="learnDetail_name__YcFY9" href="/authors/_Haziqa_Sajid"> Haziqa Sajid</a><p class="learnDetail_introduction__LE63i">Digital Storytelling for Data, AI, B2B &amp; SaaS</p></div></li></ul></div></div></div></main><footer class="footer_footerContainer__nClkK"><div class="page_container__IibMw"><section class="footer_navsWrapper__d8Hxa"><div class="footer_subscribePart__bj0xX"><div class="footer_socialMediaWrapper__v0YIi"><img src="/images/home/homepage-footer-logo.svg" alt="Zilliz Logo"/><div class="footer_socialMediaIcons__xqbMo"><a rel="noopener noreferrer" target="_blank" title="Youtube" class="footer_linkButton__e7vEY" href="https://www.youtube.com/c/MilvusVectorDatabase"><svg width="24" height="24" viewBox="0 0 24 24" fill="none"><path fill-rule="evenodd" clip-rule="evenodd" d="M20.184 4.13849C21.6225 4.21949 22.329 4.43249 22.98 5.59049C23.658 6.74699 24 8.73899 24 12.2475V12.252V12.2595C24 15.7515 23.658 17.7585 22.9815 18.903C22.3305 20.061 21.624 20.271 
20.1855 20.3685C18.747 20.451 15.1335 20.5005 12.003 20.5005C8.8665 20.5005 5.2515 20.451 3.8145 20.367C2.379 20.2695 1.6725 20.0595 1.0155 18.9015C0.345 17.757 0 15.75 0 12.258V12.255V12.2505V12.246C0 8.73899 0.345 6.74699 1.0155 5.59049C1.6725 4.43099 2.3805 4.21949 3.816 4.13699C5.2515 4.04099 8.8665 4.00049 12.003 4.00049C15.1335 4.00049 18.747 4.04099 20.184 4.13849ZM16.5 12.2505L9 7.75049V16.7505L16.5 12.2505Z" fill="black"></path></svg></a><a rel="noopener noreferrer" target="_blank" title="LinkedIn" class="footer_linkButton__e7vEY" href="https://www.linkedin.com/company/zilliz"><svg width="24" height="24" viewBox="0 0 24 24" fill="none"><path fill-rule="evenodd" clip-rule="evenodd" d="M5.8125 2.40625C5.8125 3.73519 4.73519 4.8125 3.40625 4.8125C2.07731 4.8125 1 3.73519 1 2.40625C1 1.07731 2.07731 0 3.40625 0C4.73519 0 5.8125 1.07731 5.8125 2.40625ZM1 6.875H5.91975V22H1V6.875ZM19.3205 7.05237C19.3031 7.04688 19.286 7.04122 19.2689 7.03557L19.2688 7.03556C19.2346 7.02426 19.2004 7.01296 19.1637 7.00287C19.0977 6.98775 19.0317 6.97538 18.9644 6.96438C18.7031 6.91213 18.4171 6.875 18.0816 6.875C15.2134 6.875 13.3942 8.96088 12.7948 9.76663V6.875H7.875V22H12.7948V13.75C12.7948 13.75 16.5127 8.57175 18.0816 12.375V22H23V11.7934C23 9.50813 21.4339 7.60375 19.3205 7.05237Z" fill="black"></path></svg></a><a rel="noopener noreferrer" target="_blank" title="Twitter" class="footer_linkButton__e7vEY" href="https://twitter.com/zilliz_universe"><svg height="24" width="24" viewBox="0 0 24 24" fill="none" xmlns="http://www.w3.org/2000/svg"><path d="M18.244 2.25h3.308l-7.227 8.26 8.502 11.24H16.17l-5.214-6.817L4.99 21.75H1.68l7.73-8.835L1.254 2.25H8.08l4.713 6.231zm-1.161 17.52h1.833L7.084 4.126H5.117z" fill="black"></path></svg></a><a rel="noopener noreferrer" target="_blank" title="GitHub" class="footer_linkButton__e7vEY" href="https://github.com/zilliztech"><svg width="24" height="24" viewBox="0 0 24 24" fill="none"><path fill-rule="evenodd" clip-rule="evenodd" 
d="M12.3036 1C6.0724 1 1.02539 6.04701 1.02539 12.2782C1.02539 17.2688 4.25378 21.4841 8.73688 22.9785C9.30079 23.0771 9.51226 22.7388 9.51226 22.4427C9.51226 22.1749 9.49816 21.2867 9.49816 20.3422C6.66451 20.8638 5.93142 19.6514 5.70586 19.017C5.57898 18.6927 5.02916 17.6918 4.54984 17.4239C4.1551 17.2125 3.59119 16.6908 4.53574 16.6767C5.4239 16.6626 6.0583 17.4944 6.26977 17.8328C7.28481 19.5386 8.90606 19.0593 9.55455 18.7632C9.65324 18.0301 9.94929 17.5367 10.2735 17.2548C7.76413 16.9728 5.14195 16 5.14195 11.6861C5.14195 10.4596 5.57898 9.44458 6.29796 8.6551C6.18518 8.37314 5.79044 7.21713 6.41075 5.66637C6.41075 5.66637 7.3553 5.37031 9.51226 6.82239C10.4145 6.56863 11.3732 6.44175 12.3318 6.44175C13.2905 6.44175 14.2491 6.56863 15.1514 6.82239C17.3083 5.35622 18.2529 5.66637 18.2529 5.66637C18.8732 7.21713 18.4785 8.37314 18.3657 8.6551C19.0847 9.44458 19.5217 10.4455 19.5217 11.6861C19.5217 16.0141 16.8854 16.9728 14.376 17.2548C14.7848 17.6072 15.1373 18.2839 15.1373 19.3412C15.1373 20.8497 15.1232 22.0621 15.1232 22.4427C15.1232 22.7388 15.3346 23.0912 15.8986 22.9785C20.3535 21.4841 23.5819 17.2548 23.5819 12.2782C23.5819 6.04701 18.5348 1 12.3036 1V1Z" fill="black"></path></svg></a><a rel="noopener noreferrer" target="_blank" title="Discord" class="footer_linkButton__e7vEY" href="https://discord.com/invite/8uyFbECzPX"><svg width="24" height="24" viewBox="0 0 24 24" fill="none" xmlns="http://www.w3.org/2000/svg"><path d="M20.3303 4.19247C18.7767 3.45861 17.1156 2.92527 15.3789 2.62158C15.1656 3.0109 14.9164 3.53454 14.7446 3.9511C12.8985 3.67079 11.0693 3.67079 9.25714 3.9511C9.08537 3.53454 8.83053 3.0109 8.61534 2.62158C6.87679 2.92527 5.21374 3.46057 3.66017 4.19636C0.526624 8.9771 -0.32283 13.6391 0.101898 18.2349C2.18023 19.8019 4.19439 20.7537 6.17456 21.3766C6.66347 20.6973 7.09951 19.9751 7.47516 19.214C6.75973 18.9395 6.07451 18.6008 5.42705 18.2076C5.59882 18.0792 5.76684 17.9448 5.92916 17.8066C9.87818 19.6714 14.1689 19.6714 18.0707 
17.8066C18.2349 17.9448 18.4029 18.0792 18.5728 18.2076C17.9235 18.6028 17.2364 18.9415 16.5209 19.216C16.8966 19.9751 17.3307 20.6992 17.8215 21.3786C19.8036 20.7557 21.8196 19.8038 23.898 18.2349C24.3963 12.9072 23.0466 8.28801 20.3303 4.19247ZM8.01316 15.4085C6.8277 15.4085 5.85553 14.2912 5.85553 12.9305C5.85553 11.5699 6.80694 10.4506 8.01316 10.4506C9.2194 10.4506 10.1915 11.5679 10.1708 12.9305C10.1727 14.2912 9.2194 15.4085 8.01316 15.4085ZM15.9867 15.4085C14.8013 15.4085 13.8291 14.2912 13.8291 12.9305C13.8291 11.5699 14.7805 10.4506 15.9867 10.4506C17.1929 10.4506 18.1651 11.5679 18.1443 12.9305C18.1443 14.2912 17.1929 15.4085 15.9867 15.4085Z" fill="black"></path></svg></a><a rel="noopener noreferrer" target="_blank" title="G2" class="footer_linkButton__e7vEY" href="https://www.g2.com/products/zilliz/reviews"><svg width="24" height="25" viewBox="0 0 24 25" fill="none" xmlns="http://www.w3.org/2000/svg"><g id="Icon/Social/Instagram Copy 6"><g id="Layer 1"><path id="Vector" d="M15.708 16.8491C16.4437 18.1257 17.1712 19.3879 17.8981 20.6487C14.6791 23.1131 9.67095 23.4109 5.96349 20.5729C1.69701 17.3044 0.995778 11.7274 3.27998 7.71278C5.90715 3.09514 10.8234 2.07392 13.9888 2.82274C13.9032 3.00872 12.0074 6.9418 12.0074 6.9418C12.0074 6.9418 11.8575 6.95165 11.7727 6.95329C10.8371 6.99295 10.1403 7.21065 9.39335 7.59682C8.57389 8.02442 7.87164 8.64623 7.34797 9.40789C6.8243 10.1696 6.49516 11.0479 6.38932 11.9661C6.27888 12.8973 6.40764 13.8414 6.76345 14.709C7.06429 15.4425 7.48985 16.0939 8.06035 16.6439C8.93553 17.4885 9.97698 18.0114 11.1842 18.1845C12.3274 18.3486 13.4268 18.1862 14.4571 17.6684C14.8435 17.4745 15.1722 17.2604 15.5565 16.9667C15.6055 16.9349 15.6489 16.8947 15.708 16.8491Z" fill="black"></path><path id="Vector_2" d="M15.715 5.65256C15.5282 5.46878 15.3551 5.29921 15.1828 5.12855C15.0799 5.02681 14.9809 4.92097 14.8756 4.82169C14.8379 4.78587 14.7936 4.73691 14.7936 4.73691C14.7936 4.73691 14.8294 4.66088 14.8447 4.6297C15.0463 4.22521 
15.3622 3.92956 15.7369 3.69436C16.1512 3.4323 16.6339 3.29896 17.124 3.3112C17.7511 3.32351 18.3342 3.47967 18.8262 3.9003C19.1894 4.21071 19.3757 4.60454 19.4085 5.07468C19.4632 5.8678 19.135 6.47523 18.4833 6.89914C18.1004 7.14857 17.6874 7.34138 17.2733 7.56974C17.045 7.69582 16.8497 7.80659 16.6265 8.03468C16.4302 8.26359 16.4206 8.47938 16.4206 8.47938L19.3872 8.47555V9.79679H14.8081C14.8081 9.79679 14.8081 9.70654 14.8081 9.66907C14.7906 9.0198 14.8663 8.40882 15.1636 7.81917C15.4371 7.2782 15.8621 6.88218 16.3727 6.57724C16.766 6.34231 17.1801 6.14239 17.5742 5.90855C17.8173 5.76442 17.9891 5.55301 17.9877 5.24643C17.9877 4.98333 17.7962 4.74949 17.5228 4.67647C16.8779 4.50253 16.2215 4.78012 15.8802 5.37032C15.8304 5.45647 15.7795 5.54207 15.715 5.65256Z" fill="black"></path><path id="Vector_3" d="M21.4533 15.4447L18.9533 11.1273H14.0061L11.49 15.4892H16.4736L18.9328 19.7861L21.4533 15.4447Z" fill="black"></path></g></g></svg></a><a rel="noopener noreferrer" target="_blank" title="Bluesky" class="footer_linkButton__e7vEY" href="https://bsky.app/profile/zilliz-universe.bsky.social"><svg width="24" height="24" viewBox="0 0 24 24" fill="none" xmlns="http://www.w3.org/2000/svg"><g id="Icon/Social/Instagram Copy 7"><path id="Vector" d="M5.76879 3.30387C8.29104 5.19742 11.004 9.03674 12.0001 11.0971C12.9962 9.03689 15.709 5.19738 18.2313 3.30387C20.0513 1.93757 23 0.8804 23 4.24437C23 4.9162 22.6148 9.8881 22.3889 10.6953C21.6036 13.5015 18.7421 14.2173 16.1967 13.7841C20.646 14.5413 21.7778 17.0496 19.3335 19.5579C14.6911 24.3216 12.661 18.3627 12.1406 16.8358C12.0453 16.5559 12.0007 16.4249 12 16.5363C11.9993 16.4249 11.9547 16.5559 11.8594 16.8358C11.3392 18.3627 9.30916 24.3218 4.66654 19.5579C2.22213 17.0496 3.35395 14.5412 7.80331 13.7841C5.25785 14.2173 2.39627 13.5015 1.6111 10.6953C1.38518 9.88802 1 4.91612 1 4.24437C1 0.8804 3.94882 1.93757 5.76866 3.30387H5.76879Z" fill="black"></path></g></svg></a></div></div><div 
class="subscribeFooter_container__V07MM footer_subscribeRoot__ni93s"><strong class="subscribeFooter_title__LHQsP">Sign up for the Zilliz newsletter</strong><div class="subscribeFooter_inputContainer__tnxFu"><input class="subscribeFooter_inputEle__oP0t0" placeholder="Email address" value=""/><button class="BaseButton_root__SFmw5 BaseButton_contained__2JisA subscribeFooter_btn__Hk7zt" style="cursor:pointer">Subscribe</button></div><p class="subscribeFooter_address__cI4er">201 Redwood Shores Pkwy, Suite 330 Redwood City, California 94065</p></div></div><ul class="footer_navs__0biPd"><li class="footer_navColumn__kADYe"><h5 class="footer_cat___oPg7">Products</h5><ul><li class="footer_titleGroup__v56YT"><a title="Zilliz Cloud" target="_self" class="footer_linkButton__e7vEY" href="/cloud">Zilliz Cloud</a></li><li class="footer_titleGroup__v56YT"><a title="Zilliz Cloud BYOC" target="_self" class="footer_linkButton__e7vEY" href="/bring-your-own-cloud">Zilliz Cloud BYOC</a></li><li class="footer_titleGroup__v56YT"><a title="Zilliz Cloud Free Tier" target="_self" class="footer_linkButton__e7vEY" href="/zilliz-cloud-free-tier">Zilliz Cloud Free Tier</a></li><li class="footer_titleGroup__v56YT"><a title="ZIlliz Migration Service" target="_self" class="footer_linkButton__e7vEY" href="/zilliz-migration-service">ZIlliz Migration Service</a></li><li class="footer_titleGroup__v56YT"><a title="Milvus" target="_self" class="footer_linkButton__e7vEY" href="/what-is-milvus">Milvus</a></li><li class="footer_titleGroup__v56YT"><a rel="noopener noreferrer" target="_blank" title="DeepSearcher" class="footer_linkButton__e7vEY" href="https://github.com/zilliztech/deep-searcher">DeepSearcher<svg width="12" height="12" viewBox="0 0 12 12" fill="none" xmlns="http://www.w3.org/2000/svg"><path d="M7.86391 4L2.70712 4L2.70712 3L9.57108 3V9.86396L8.57108 9.86396L8.57108 4.70704L2.70712 10.571L2.00002 9.86389L7.86391 4Z" fill="black"></path></svg></a></li><li class="footer_titleGroup__v56YT"><a 
title="GPTCache" target="_self" class="footer_linkButton__e7vEY" href="/what-is-gptcache">GPTCache</a></li><li class="footer_titleGroup__v56YT"><a title="Attu" target="_self" class="footer_linkButton__e7vEY" href="/attu">Attu</a></li><li class="footer_titleGroup__v56YT"><a rel="noopener noreferrer" target="_blank" title="Milvus CLI" class="footer_linkButton__e7vEY" href="https://github.com/zilliztech/milvus_cli">Milvus CLI<svg width="12" height="12" viewBox="0 0 12 12" fill="none" xmlns="http://www.w3.org/2000/svg"><path d="M7.86391 4L2.70712 4L2.70712 3L9.57108 3V9.86396L8.57108 9.86396L8.57108 4.70704L2.70712 10.571L2.00002 9.86389L7.86391 4Z" fill="black"></path></svg></a></li><li class="footer_titleGroup__v56YT"><a title="Vector Transport Service" target="_self" class="footer_linkButton__e7vEY" href="/vector-transport-service">Vector Transport Service</a></li></ul></li><li class="footer_navColumn__kADYe"><h5 class="footer_cat___oPg7">Developers</h5><ul><li class="footer_titleGroup__v56YT"><a rel="noopener noreferrer" target="_blank" title="Documentation" class="footer_linkButton__e7vEY" href="https://docs.zilliz.com/docs/home">Documentation<svg width="12" height="12" viewBox="0 0 12 12" fill="none" xmlns="http://www.w3.org/2000/svg"><path d="M7.86391 4L2.70712 4L2.70712 3L9.57108 3V9.86396L8.57108 9.86396L8.57108 4.70704L2.70712 10.571L2.00002 9.86389L7.86391 4Z" fill="black"></path></svg></a></li><li class="footer_titleGroup__v56YT"><a title="Open-Source Projects" target="_self" class="footer_linkButton__e7vEY" href="/product/open-source-vector-database">Open-Source Projects</a></li><li class="footer_titleGroup__v56YT"><a rel="noopener noreferrer" target="_self" title="VectorDB Benchmark" class="footer_linkButton__e7vEY" href="/vector-database-benchmark-tool">VectorDB Benchmark</a></li><li class="footer_titleGroup__v56YT"><a title="Free RAG Cost Calculator" target="_self" class="footer_linkButton__e7vEY" href="/rag-cost-calculator">Free RAG Cost 
Calculator</a></li><li class="footer_titleGroup__v56YT"><a title="RAG Tutorials" target="_self" class="footer_linkButton__e7vEY" href="/tutorials/rag">RAG Tutorials</a></li><li class="footer_titleGroup__v56YT"><a title="Milvus Notebooks" target="_self" class="footer_linkButton__e7vEY" href="/learn/milvus-notebooks">Milvus Notebooks</a></li></ul></li><li class="footer_navColumn__kADYe"><h5 class="footer_cat___oPg7">Resources</h5><ul><li class="footer_titleGroup__v56YT"><a title="Blog" target="_self" class="footer_linkButton__e7vEY" href="/blog">Blog</a></li><li class="footer_titleGroup__v56YT"><a title="Learning Center" target="_self" class="footer_linkButton__e7vEY" href="/learn">Learning Center</a></li><li class="footer_titleGroup__v56YT"><a title="GenAI Resource Hub" target="_self" class="footer_linkButton__e7vEY" href="/learn/generative-ai">GenAI Resource Hub</a></li><li class="footer_titleGroup__v56YT"><a title="VectorDB Comparison" target="_self" class="footer_linkButton__e7vEY" href="/comparison">VectorDB Comparison</a></li><li class="footer_titleGroup__v56YT"><a title="Guides &amp; Whitepapers" target="_self" class="footer_linkButton__e7vEY" href="/resources">Guides &amp; Whitepapers</a></li><li class="footer_titleGroup__v56YT"><a title="Popular Embedding Models" target="_self" class="footer_linkButton__e7vEY" href="/ai-models">Popular Embedding Models</a></li><li class="footer_titleGroup__v56YT"><a title="Data Connectors" target="_self" class="footer_linkButton__e7vEY" href="/data-connectors">Data Connectors</a></li><li class="footer_titleGroup__v56YT"><a title="Glossary" target="_self" class="footer_linkButton__e7vEY" href="/glossary">Glossary</a></li><li class="footer_titleGroup__v56YT"><a title="What is RAG?" target="_self" class="footer_linkButton__e7vEY" href="/learn/Retrieval-Augmented-Generation">What is RAG?</a></li><li class="footer_titleGroup__v56YT"><a title="What is a Vector Database?" 
target="_self" class="footer_linkButton__e7vEY" href="/learn/what-is-vector-database">What is a Vector Database?</a></li><li class="footer_titleGroup__v56YT"><a title="Trust Center" target="_self" class="footer_linkButton__e7vEY" href="/trust-center">Trust Center</a></li><li class="footer_titleGroup__v56YT"><a title="AI Reference Guide" target="_self" class="footer_linkButton__e7vEY footer_faqEntry__udaD3" href="/ai-faq">AI Reference Guide</a></li></ul></li><li class="footer_navColumn__kADYe"><h5 class="footer_cat___oPg7">Company</h5><ul><li class="footer_titleGroup__v56YT"><a title="About" target="_self" class="footer_linkButton__e7vEY" href="/about">About</a></li><li class="footer_titleGroup__v56YT"><a title="Careers" target="_self" class="footer_linkButton__e7vEY" href="/careers">Careers</a><span class="footer_highlightItem__lwUcw"></span></li><li class="footer_titleGroup__v56YT"><a title="News" target="_self" class="footer_linkButton__e7vEY" href="/news">News</a></li><li class="footer_titleGroup__v56YT"><a title="Partners" target="_self" class="footer_linkButton__e7vEY" href="/partners">Partners</a></li><li class="footer_titleGroup__v56YT"><a title="Events" target="_self" class="footer_linkButton__e7vEY" href="/event">Events</a></li><li class="footer_titleGroup__v56YT"><a title="Contact Sales" target="_self" class="footer_linkButton__e7vEY" href="/contact-sales">Contact Sales</a></li><li class="footer_titleGroup__v56YT"><a title="Brand Assets" target="_self" class="footer_linkButton__e7vEY" href="/brand-assets">Brand Assets</a></li></ul></li></ul></section><div class="footer_bottom__0Qj7r"><div class="footer_leftPart__k5npK"><div><a href="/terms-and-conditions">Terms of Service</a><a href="/privacy-policy">Privacy Policy</a><a href="/security">Security</a><a rel="noopener noreferrer" target="_blank" href="https://status.zilliz.com/">System Status</a><button class="footer_cookieBtn__KJDjM">Cookie Settings</button><div class="footer_mark__p3pFH">LF AI, LF AI 
&amp; data, Milvus, and associated open-source project names are trademarks of the Linux Foundation.</div><div class="footer_copyright__AUuel">© Zilliz 2025 All rights reserved.</div></div></div><div class="footer_logoSection__gXPke"><img src="/images/layout/soc-logo.png" alt="aicpa"/><img src="/images/layout/iso-logo.png" alt="ISO"/></div></div></div></footer><div class="inkeep_inkeepChatButtonContainer__sEQtK"><div class="inkeep_inkeepButtonBgWrapper__Mqwne"><button class="inkeep_inkeepChatButton__q9V8P"><svg width="15" height="16" viewBox="0 0 15 16" fill="none" xmlns="http://www.w3.org/2000/svg"><path d="M4.06875 3.7094C4.37476 3.7094 4.64788 3.90089 4.7525 4.18846L7.66377 12.1945L7.68581 12.2655C7.77631 12.6232 7.58293 12.9981 7.22879 13.127C6.87469 13.2557 6.4852 13.093 6.3247 12.7609L6.29627 12.692L5.74259 11.1695H2.39491L1.84123 12.692C1.70382 13.0697 1.28644 13.2643 0.908711 13.127C0.531024 12.9896 0.336372 12.5722 0.473726 12.1945L3.385 4.18846L3.43191 4.0854C3.55849 3.8561 3.80098 3.7094 4.06875 3.7094ZM2.93296 9.68974L2.92443 9.7139H5.21307L5.20454 9.68974L4.06875 6.56595L2.93296 9.68974Z" fill="url(#paint0_linear_11477_442303)"></path><path d="M10.6191 12.4432V10.2598C10.6191 9.8578 10.945 9.53195 11.3469 9.53195C11.7489 9.53195 12.0747 9.8578 12.0747 10.2598V12.4432C12.0747 12.8452 11.7489 13.171 11.3469 13.171C10.945 13.171 10.6191 12.8452 10.6191 12.4432Z" fill="url(#paint1_linear_11477_442303)"></path><path d="M11.0201 2.37198C11.1338 2.11266 11.5601 2.11266 11.6738 2.37198C11.8986 2.88469 12.2258 3.5124 12.6127 3.89927C12.9996 4.28613 13.6273 4.61338 14.14 4.83817C14.3993 4.95185 14.3993 5.37821 14.14 5.4919C13.6273 5.71669 12.9996 6.04395 12.6127 6.43081C12.2258 6.81768 11.8986 7.44539 11.6738 7.95811C11.5601 8.21743 11.1338 8.21743 11.0201 7.95811C10.7953 7.4454 10.468 6.81768 10.0812 6.43081C9.69429 6.04395 9.06658 5.71669 8.55386 5.4919C8.29454 5.37821 8.29454 4.95186 8.55386 4.83817C9.06658 4.61338 9.69429 4.28613 10.0812 3.89927C10.468 
3.5124 10.7953 2.88469 11.0201 2.37198Z" fill="url(#paint2_linear_11477_442303)"></path><defs><linearGradient id="paint0_linear_11477_442303" x1="0.429688" y1="2.33714" x2="10.9766" y2="9.53844" gradientUnits="userSpaceOnUse"><stop stop-color="#00EF8B"></stop><stop offset="0.505" stop-color="#0044E4"></stop><stop offset="1" stop-color="#CD3FFF"></stop></linearGradient><linearGradient id="paint1_linear_11477_442303" x1="0.429688" y1="2.33714" x2="10.9766" y2="9.53844" gradientUnits="userSpaceOnUse"><stop stop-color="#00EF8B"></stop><stop offset="0.505" stop-color="#0044E4"></stop><stop offset="1" stop-color="#CD3FFF"></stop></linearGradient><linearGradient id="paint2_linear_11477_442303" x1="0.429688" y1="2.33714" x2="10.9766" y2="9.53844" gradientUnits="userSpaceOnUse"><stop stop-color="#00EF8B"></stop><stop offset="0.505" stop-color="#0044E4"></stop><stop offset="1" stop-color="#CD3FFF"></stop></linearGradient></defs></svg>Ask AI</button></div></div><div class="inkeep_inkeepChatModalContainer__dB5kW inkeep_hiddenEle__sZZ1h"><div class="inkeep_inkeepChatModalContent___AcLJ"><div class="inkeep_inkeepChatModalHeader__Z5g4t"><h3 class="inkeep_inkeepChatModalTitle__RNvS2">AI Assistant</h3><button class="inkeep_inkeepChatModalCloseButton__DKIqe"><svg width="24" height="24" viewBox="0 0 24 24"><path d="M5 5L19 19" stroke="#1D2939" stroke-width="2"></path><path d="M19 5L5 19" stroke="#1D2939" stroke-width="2"></path></svg></button></div><div class="inkeep_chatModal__EZkCw"></div></div><div class="inkeep_closeButtonWrapper__Cw9No"><button class="inkeep_closeButton__tJl6d"><svg width="37" height="36" viewBox="0 0 37 36" fill="none" xmlns="http://www.w3.org/2000/svg"><circle cx="18.3965" cy="18" r="17.5" fill="white" stroke="#5D6D85"></circle><path d="M18.3975 0C28.3384 0.000263882 36.3975 8.05904 36.3975 18C36.3975 27.941 28.3384 35.9997 18.3975 36C8.45634 36 0.397461 27.9411 0.397461 18C0.397461 8.05887 8.45634 0 18.3975 0ZM18.3975 1.5C9.28476 1.5 1.89746 8.8873 1.89746 
18C1.89746 27.1127 9.28476 34.5 18.3975 34.5C27.5099 34.4997 34.8975 27.1125 34.8975 18C34.8975 8.88746 27.5099 1.50026 18.3975 1.5ZM24.0732 15.248L24.9482 16.3174L25.1699 16.5879L24.8994 16.8096C24.4326 17.1915 22.8576 18.4233 21.4053 19.5547C20.678 20.1212 19.9794 20.6641 19.4629 21.0654C19.2046 21.2661 18.991 21.4318 18.8428 21.5469C18.7691 21.6041 18.7112 21.6491 18.6719 21.6797C18.6522 21.6949 18.6361 21.707 18.626 21.7148C18.6213 21.7185 18.6177 21.7217 18.6152 21.7236C18.6142 21.7244 18.6129 21.7251 18.6123 21.7256L18.6113 21.7266L18.3955 21.8936L18.1807 21.7256L11.8994 16.8145L11.6182 16.5938L11.8447 16.3174L12.7188 15.248L12.9404 14.9775L13.2119 15.1992L18.3965 19.4404L23.5811 15.1992L23.8516 14.9775L24.0732 15.248Z" fill="url(#paint0_linear_11620_448113)"></path><defs><linearGradient id="paint0_linear_11620_448113" x1="0.397461" y1="0.522786" x2="31.3993" y2="17.2587" gradientUnits="userSpaceOnUse"><stop stop-color="#00EF8B"></stop><stop offset="0.505" stop-color="#0044E4"></stop><stop offset="1" stop-color="#CD3FFF"></stop></linearGradient></defs></svg></button></div></div></div><script id="__NEXT_DATA__" type="application/json">{"props":{"pageProps":{"data":{"backgroundImage":"https://assets.zilliz.com/large_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2.png","authorNames":[" Haziqa Sajid"],"authors":[{"id":118,"name":" Haziqa Sajid","author_tags":"Freelance Technical Writer","published_at":"2024-03-12T10:25:34.265Z","created_by":18,"updated_by":18,"created_at":"2024-03-12T10:25:31.148Z","updated_at":"2024-07-03T07:58:27.010Z","home_page":"LinkedIn","home_page_link":"https://www.linkedin.com/in/haziqa-sajid-22b53245/","self_intro":"Digital Storytelling for Data, AI, B2B \u0026 SaaS","repost_to_medium":null,"repost_state":null,"meta_description":" Haziqa Sajid, Freelance Technical Writer","locale":"en","avatar":{"id":2780,"name":" Haziqa 
Sajid.jpeg","alternativeText":"","caption":"","width":140,"height":140,"formats":null,"hash":"Haziqa_Sajid_e9a3a2f2ed","ext":".jpeg","mime":"image/jpeg","size":5.11,"url":"https://assets.zilliz.com/Haziqa_Sajid_e9a3a2f2ed.jpeg","previewUrl":null,"provider":"s3","provider_metadata":null,"created_by":60,"updated_by":60,"created_at":"2024-03-12T10:24:50.762Z","updated_at":"2024-03-12T10:24:50.771Z"}}],"display_time":"Jun 03, 2024","title":"An Introduction to Vector Embeddings: What They Are and How to Use Them ","sub_title":null,"tags":[{"id":39,"name":"VectorDB 101","published_at":"2022-09-30T05:56:34.554Z","created_by":18,"updated_by":18,"created_at":"2022-09-30T05:56:31.633Z","updated_at":"2024-07-10T07:12:04.155Z","locale":"en"}],"articleContent":"\u003cp\u003e\u003cem\u003eUnderstand vector embeddings and when and how to use them. Explore real-world applications with Milvus and Zilliz Cloud vector databases.\u003c/em\u003e\u003c/p\u003e\n\u003cp\u003e\u003ca href=\"https://zilliz.com/glossary/vector-embeddings\"\u003eVector embeddings\u003c/a\u003e are numerical representations of data points, making unstructured data easier to search against. These embeddings are stored in specialized databases like\u003ca href=\"https://milvus.io/intro\"\u003e Milvus\u003c/a\u003e and\u003ca href=\"https://zilliz.com/cloud\"\u003e Zilliz Cloud\u003c/a\u003e (fully managed Milvus), which utilize advanced algorithms and indexing techniques for quick data retrieval.\u003c/p\u003e\n\u003cp\u003eModern artificial intelligence (AI) models, like \u003ca href=\"https://zilliz.com/glossary/large-language-models-(llms)\"\u003eLarge Language Models\u003c/a\u003e (LLMs), use text vector embeddings to understand natural language and generate relevant responses. 
Many LLM applications also use \u003ca href=\"https://zilliz.com/learn/Retrieval-Augmented-Generation\"\u003eRetrieval Augmented Generation (RAG)\u003c/a\u003e to retrieve relevant information from external vector stores for task-specific use cases.\u003c/p\u003e\n\u003cp\u003eIn this blog post, we will explain the concept of vector embeddings and explore their applications, best practices, and tools for working with them.\u003c/p\u003e\n\u003ch2 id=\"What-are-Vector-Embeddings\" class=\"common-anchor-header\"\u003eWhat are Vector Embeddings?\u003c/h2\u003e\u003cp\u003eA vector embedding is a list of numbers, with each number representing a feature of the data. Embedding models learn these values by analyzing relationships within a dataset, so that embeddings lying closer to each other in the vector space represent data points that are semantically similar.\u003c/p\u003e\n\u003cp\u003eEmbeddings are generated by deep learning models trained to map data into a high-dimensional vector space. 
Popular embedding models like BERT and Data2Vec form the basis of many modern deep-learning applications.\u003c/p\u003e\n\u003cp\u003eVector embeddings are also widely used in \u003ca href=\"https://zilliz.com/learn/A-Beginner-Guide-to-Natural-Language-Processing\"\u003eNLP\u003c/a\u003e and computer vision (CV) applications because they represent complex data compactly and make similarity comparisons efficient.\u003c/p\u003e\n\u003ch2 id=\"Types-of-Vector-Embeddings\" class=\"common-anchor-header\"\u003eTypes of Vector Embeddings\u003c/h2\u003e\u003cp\u003eThere are three main types of embeddings, distinguished by how their values are represented and stored: \u003ca href=\"https://zilliz.com/learn/sparse-and-dense-embeddings\"\u003edense, sparse,\u003c/a\u003e and binary embeddings. Here’s how they differ in characteristics and use:\u003c/p\u003e\n
\u003ch3 id=\"1-Dense-Embeddings\" class=\"common-anchor-header\"\u003e1. Dense Embeddings\u003c/h3\u003e\u003cp\u003eDense embeddings are vectors in which most elements are non-zero. Because every dimension is stored explicitly, including any zero values, they capture fine-grained detail but are less storage efficient.\u003c/p\u003e\n\u003cp\u003eWord2Vec, GloVe, CLIP, and BERT are models that generate dense vector embeddings from input data.\u003c/p\u003e\n\u003ch3 id=\"2-Sparse-Embeddings\" class=\"common-anchor-header\"\u003e2. Sparse Embeddings\u003c/h3\u003e\u003cp\u003eSparse embeddings are high-dimensional vectors in which most elements are zero. 
The non-zero values typically represent the relative importance of specific features, such as the weight of a term in a document relative to the rest of the corpus. Because only the non-zero values need to be stored, sparse embeddings require less memory and storage and suit high-dimensional sparse data such as word frequencies.\u003c/p\u003e\n\u003cp\u003eTF-IDF and SPLADE are popular methods of generating sparse vector embeddings.\u003c/p\u003e\n\u003ch3 id=\"3-Binary-Embeddings\" class=\"common-anchor-header\"\u003e3. Binary Embeddings\u003c/h3\u003e\u003cp\u003eA binary embedding stores each dimension as a single bit, either 1 or 0. This is 32 times more compact than storing each dimension as a 32-bit floating-point number, and it speeds up retrieval because similarity can be computed with fast bitwise operations. 
However, it causes some information loss because numerical precision is reduced.\u003c/p\u003e\n\u003cp\u003eEven so, binary embeddings are popular in use cases where speed matters more than a slight loss in accuracy.\u003c/p\u003e\n\u003ch2 id=\"How-are-Vector-Embeddings-Created\" class=\"common-anchor-header\"\u003eHow are Vector Embeddings Created?\u003c/h2\u003e\u003cp\u003eVector embeddings are created with deep learning models and statistical methods. These models identify patterns and relationships in the input data to learn how data points differ from one another, and then place each data point in an n-dimensional vector space according to what they have learned.\u003c/p\u003e\n\u003cp\u003eAn n-dimensional space goes beyond the three dimensions we can visualize, and each dimension can capture a different aspect of the data. Higher-dimensional embeddings can therefore capture finer details, which generally leads to more accurate results.\u003c/p\u003e\n\u003cp\u003eFor example, with text data, a high-dimensional space can capture subtle differences in word meanings. A 2-dimensional space might place the words “tired” and “exhausted” at nearly the same point, whereas an n-dimensional space can separate them along additional dimensions, capturing their difference in emotional intensity. 
Mathematically, a vector \u003ccode\u003ev\u003c/code\u003e in n-dimensional space is written as:\u003c/p\u003e\n\u003cp\u003ev = [v\u003csub\u003e1\u003c/sub\u003e, v\u003csub\u003e2\u003c/sub\u003e, …, v\u003csub\u003en\u003c/sub\u003e], where each component v\u003csub\u003ei\u003c/sub\u003e is a real number.\u003c/p\u003e\n\u003cp\u003eTwo popular techniques for creating vector embeddings are:\u003c/p\u003e\n\u003ch3 id=\"Neural-Networks\" class=\"common-anchor-header\"\u003eNeural Networks\u003c/h3\u003e\u003cp\u003eNeural networks, such as \u003ca href=\"https://zilliz.com/glossary/convolutional-neural-network\"\u003eConvolutional Neural Networks\u003c/a\u003e (CNNs) or \u003ca href=\"https://zilliz.com/glossary/recurrent-neural-networks\"\u003eRecurrent Neural Networks\u003c/a\u003e (RNNs), excel at learning complex patterns in data. 
Transformer-based models such as BERT take this further: BERT analyzes the words on both sides of a term to understand its meaning in context and generate embeddings.\u003c/p\u003e\n\u003ch3 id=\"Matrix-Factorization\" class=\"common-anchor-header\"\u003eMatrix Factorization\u003c/h3\u003e\u003cp\u003eMatrix factorization is a simpler embedding technique than neural networks. It represents the training data as a matrix whose rows and columns correspond to two kinds of entities, and then factorizes this matrix into two lower-rank matrices whose rows serve as embeddings. Matrix factorization is widely used in recommendation systems, where the input is a user rating matrix with rows representing users and columns representing items (e.g., movies). Multiplying the user embedding matrix by the transpose of the item embedding matrix produces a matrix that approximates the original rating matrix.\u003c/p\u003e\n\u003cp\u003eVarious tools and libraries simplify generating embeddings from input data. Popular options include TensorFlow, PyTorch, and Hugging Face Transformers. 
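The matrix factorization approach described above can be sketched in plain Python, without any of these libraries. The example below assumes a tiny hypothetical rating matrix and learns 2-dimensional user and item embeddings with stochastic gradient descent, so that each user-item dot product approximates a known rating. The matrix, embedding size, learning rate, regularization strength, and epoch count are all illustrative choices.

```python
import random

# Toy rating matrix: rows are users, columns are movies, 0 means "not rated"
ratings = [
    [5, 3, 0, 1],
    [4, 0, 0, 1],
    [1, 1, 0, 5],
    [0, 1, 5, 4],
]
num_users, num_items = len(ratings), len(ratings[0])
k, lr, reg, epochs = 2, 0.01, 0.02, 5000  # embedding size and training settings (assumed)

random.seed(0)
P = [[random.uniform(0, 0.5) for _ in range(k)] for _ in range(num_users)]  # user embeddings
Q = [[random.uniform(0, 0.5) for _ in range(k)] for _ in range(num_items)]  # item embeddings


def predict(u, i):
    """Predicted rating: dot product of user u's and item i's embeddings."""
    return sum(P[u][f] * Q[i][f] for f in range(k))


observed = [(u, i) for u in range(num_users) for i in range(num_items) if ratings[u][i] > 0]

# Stochastic gradient descent on the regularized squared error of observed ratings
for _ in range(epochs):
    for u, i in observed:
        err = ratings[u][i] - predict(u, i)
        for f in range(k):
            pu, qi = P[u][f], Q[i][f]
            P[u][f] += lr * (err * qi - reg * pu)
            Q[i][f] += lr * (err * pu - reg * qi)


def rmse():
    """Root-mean-square error over the observed ratings only."""
    sq = sum((ratings[u][i] - predict(u, i)) ** 2 for u, i in observed)
    return (sq / len(observed)) ** 0.5


# P times Q transposed approximates the known ratings and fills in the missing ones
approx = [[round(predict(u, i), 2) for i in range(num_items)] for u in range(num_users)]
print("RMSE on observed ratings:", round(rmse(), 3))
for row in approx:
    print(row)
```

The zero cells in `ratings` receive predicted values in `approx`; those predictions are what a recommender would rank when suggesting unseen movies.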
TensorFlow, PyTorch, and Hugging Face Transformers are open source and offer user-friendly documentation for building embedding models.\u003c/p\u003e\n\u003cp\u003eThe following table lists different embedding models, their descriptions, and links to learn more:\u003c/p\u003e\n\u003ctable\u003e\n\u003cthead\u003e\n\u003ctr\u003e\u003cth style=\"text-align:center\"\u003eModel\u003c/th\u003e\u003cth style=\"text-align:center\"\u003eDescription\u003c/th\u003e\u003cth style=\"text-align:center\"\u003eLink\u003c/th\u003e\u003c/tr\u003e\n\u003c/thead\u003e\n\u003ctbody\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eNeural Networks\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003eNeural networks such as CNNs and RNNs learn patterns in data, which makes them useful for generating vector embeddings. Word2Vec, for example, uses a shallow neural network to learn word embeddings.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://developers.google.com/machine-learning/crash-course/introduction-to-neural-networks/video-lecture\"\u003ehttps://developers.google.com/machine-learning/crash-course/introduction-to-neural-networks/video-lecture\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eMatrix Factorization\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003eMatrix factorization suits collaborative filtering tasks such as recommendation systems. It captures user preferences by decomposing the user-item matrix into lower-dimensional user and item embeddings.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://developers.google.com/machine-learning/recommendation/collaborative/matrix\"\u003ehttps://developers.google.com/machine-learning/recommendation/collaborative/matrix\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eGloVe\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003eGloVe learns word embeddings from global word co-occurrence statistics. It generates a single, context-independent embedding for each word.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://nlp.stanford.edu/projects/glove/\"\u003ehttps://nlp.stanford.edu/projects/glove/\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eBERT\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003eBERT (Bidirectional Encoder Representations from Transformers) is a pre-trained model that analyzes textual data bidirectionally.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BERT-The-Foundation-Model-for-BGE-M3-and-Splade\"\u003ehttps://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BERT-The-Foundation-Model-for-BGE-M3-and-Splade\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eColBERT\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003eA token-level embedding and ranking model.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://zilliz.com/learn/explore-colbert-token-level-embedding-and-ranking-model-for-similarity-search\"\u003ehttps://zilliz.com/learn/explore-colbert-token-level-embedding-and-ranking-model-for-similarity-search\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eSPLADE\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003eAn advanced embedding model for generating sparse embeddings.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#SPLADE\"\u003ehttps://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#SPLADE\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003ctr\u003e\u003ctd style=\"text-align:center\"\u003eBGE-M3\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://huggingface.co/BAAI/bge-m3\"\u003eBGE-M3\u003c/a\u003e is an advanced machine-learning model that extends BERT's 
capabilities.\u003c/td\u003e\u003ctd style=\"text-align:center\"\u003e\u003ca href=\"https://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BGE-M3\"\u003ehttps://zilliz.com/learn/bge-m3-and-splade-two-machine-learning-models-for-generating-sparse-embeddings#BGE-M3\u003c/a\u003e\u003c/td\u003e\u003c/tr\u003e\n\u003c/tbody\u003e\n\u003c/table\u003e\n\u003ch2 id=\"What-are-Vector-Embeddings-Used-for\" class=\"common-anchor-header\"\u003eWhat are Vector Embeddings Used for?\u003c/h2\u003e\u003cp\u003eVector embeddings are widely used in modern search and AI applications, including:\u003c/p\u003e\n\u003cul\u003e\n\u003cli\u003e\u003cstrong\u003eSimilarity Search:\u003c/strong\u003e Similarity search is a technique for finding similar data points in a high-dimensional space. It works by measuring the distance or similarity between vector embeddings with metrics such as Euclidean distance, cosine similarity, or, for binary vectors, Jaccard similarity. Modern search engines use similarity search to retrieve web pages relevant to a user's query.\u003c/li\u003e\n\u003cli\u003e\u003cstrong\u003eRecommendation Systems:\u003c/strong\u003e Recommendation systems use vector embeddings to group similar items, and items from the same group as those a user already likes can then be recommended to that user. 
These systems can cluster at several levels, for example, grouping users by demographics and preferences, and grouping similar products. All this information is stored as vector embeddings for efficient and accurate retrieval at runtime.\u003c/li\u003e\n\u003cli\u003e\u003cstrong\u003eRetrieval Augmented Generation (RAG):\u003c/strong\u003e RAG is a popular technique for reducing hallucinations in large language models (LLMs) and providing them with additional knowledge. Embedding models transform external knowledge and user queries into vector embeddings. A vector database stores the embeddings and runs a similarity search to find the results most relevant to the user query. The LLM then generates the final answer based on the retrieved context.\u003c/li\u003e\n\u003c/ul\u003e\n\u003ch2 id=\"Storing-Indexing-and-Retrieving-Vector-Embeddings-with-Milvus\" class=\"common-anchor-header\"\u003eStoring, Indexing, and Retrieving Vector Embeddings with Milvus\u003c/h2\u003e\u003cp\u003eMilvus is an open-source vector database for storing, indexing, and searching vector embeddings. 
Here’s a step-by-step walkthrough using the \u003ccode\u003ePyMilvus\u003c/code\u003e library:\u003c/p\u003e\n\u003ch3 id=\"1-Install-Libraries-and-Set-up-a-Milvus-Database\" class=\"common-anchor-header\"\u003e1. Install Libraries and Set up a Milvus Database\u003c/h3\u003e\u003cp\u003eInstall \u003ccode\u003epymilvus\u003c/code\u003e and \u003ccode\u003egensim\u003c/code\u003e: \u003ccode\u003epymilvus\u003c/code\u003e is the Python SDK for Milvus, and \u003ccode\u003egensim\u003c/code\u003e is a Python library for NLP that provides access to pre-trained word embedding models. Run the following command to install them (in a Jupyter notebook, prefix it with \u003ccode\u003e!\u003c/code\u003e):\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003epip install -U pymilvus gensim\n\u003c/code\u003e\u003c/pre\u003e\n\u003cp\u003eYou can run \u003ca href=\"https://milvus.io/docs/install_standalone-docker.md\"\u003eMilvus\u003c/a\u003e as a standalone server in Docker; if you choose this option, make sure Docker is installed on your system. 
Then run the following commands in your terminal to download the installation script and start Milvus:\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003ecurl -sfL https://raw.githubusercontent.com/milvus-io/milvus/master/scripts/standalone_embed.sh -o standalone_embed.sh\nbash standalone_embed.sh start\n\u003c/code\u003e\u003c/pre\u003e\n\u003cp\u003eThe Milvus server then listens on \u003ccode\u003ehttp://localhost:19530\u003c/code\u003e. For a quick local setup without Docker, you can instead use Milvus Lite by creating a \u003ccode\u003eMilvusClient\u003c/code\u003e instance with a file name, like \u003ccode\u003emilvus_demo.db\u003c/code\u003e, which stores all the data in that local file:\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003efrom pymilvus import MilvusClient\n\nclient = MilvusClient(\u0026quot;milvus_demo.db\u0026quot;)  # Milvus Lite: all data in a local file\n# client = MilvusClient(uri=\u0026quot;http://localhost:19530\u0026quot;)  # or: the Docker standalone server\n\u003c/code\u003e\u003c/pre\u003e\n\u003ch3 id=\"2-Generate-Vector-Embeddings\" class=\"common-anchor-header\"\u003e2. 
Generate Vector Embeddings\u003c/h3\u003e\u003cp\u003eThe following code creates a collection to store embeddings, loads a pre-trained GloVe model from \u003ccode\u003egensim\u003c/code\u003e, and retrieves embeddings for simple words such as ice and water. The \u003ccode\u003eglove-wiki-gigaword-50\u003c/code\u003e model produces 50-dimensional vectors, so the vector field is declared with \u003ccode\u003edim=50\u003c/code\u003e:\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003eimport gensim.downloader as api\nfrom pymilvus import FieldSchema, CollectionSchema, DataType\n\n# Define the collection schema: primary key, the word, and its 50-d embedding\nfields = [\n    FieldSchema(name=\u0026quot;pk\u0026quot;, dtype=DataType.INT64, is_primary=True, auto_id=False),\n    FieldSchema(name=\u0026quot;words\u0026quot;, dtype=DataType.VARCHAR, max_length=50),\n    FieldSchema(name=\u0026quot;embeddings\u0026quot;, dtype=DataType.FLOAT_VECTOR, dim=50),\n]\nschema = CollectionSchema(fields, \u0026quot;Demo to store and retrieve embeddings\u0026quot;)\n\n# Create the collection with the client from step 1\nclient.create_collection(collection_name=\u0026quot;milvus_demo\u0026quot;, schema=schema)\n\n# Load the pre-trained model from gensim (downloaded on first use)\nmodel = api.load(\u0026quot;glove-wiki-gigaword-50\u0026quot;)\n\n# Look up the embedding of each word\nice = model[\u0026quot;ice\u0026quot;]\nwater = model[\u0026quot;water\u0026quot;]\ncold = model[\u0026quot;cold\u0026quot;]\ntree = model[\u0026quot;tree\u0026quot;]\nman = model[\u0026quot;man\u0026quot;]\nwoman = model[\u0026quot;woman\u0026quot;]\nchild = model[\u0026quot;child\u0026quot;]\nfemale = model[\u0026quot;female\u0026quot;]\n\u003c/code\u003e\u003c/pre\u003e\n\u003ch3 id=\"3-Store-Vector-Embeddings\" class=\"common-anchor-header\"\u003e3. 
Store Vector Embeddings\u003c/h3\u003e\u003cp\u003eStore the vector embeddings generated in the previous step in the \u003ccode\u003emilvus_demo\u003c/code\u003e collection created above. \u003ccode\u003eMilvusClient.insert()\u003c/code\u003e takes one dictionary per row, keyed by field name:\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003e# Build one row per word: primary key, the word, and its embedding\nwords = [\u0026quot;ice\u0026quot;, \u0026quot;water\u0026quot;, \u0026quot;cold\u0026quot;, \u0026quot;tree\u0026quot;, \u0026quot;man\u0026quot;, \u0026quot;woman\u0026quot;, \u0026quot;child\u0026quot;, \u0026quot;female\u0026quot;]\nvectors = [ice, water, cold, tree, man, woman, child, female]\ndata = [\n    {\u0026quot;pk\u0026quot;: pk, \u0026quot;words\u0026quot;: word, \u0026quot;embeddings\u0026quot;: vector.tolist()}\n    for pk, (word, vector) in enumerate(zip(words, vectors), start=1)\n]\n\n# Insert the rows into the collection\ninsert_result = client.insert(collection_name=\u0026quot;milvus_demo\u0026quot;, data=data)\n\u003c/code\u003e\u003c/pre\u003e\n\u003ch3 id=\"4-Create-Indexes-on-Entries\" class=\"common-anchor-header\"\u003e4. Create Indexes on Entries\u003c/h3\u003e\u003cp\u003eIndexes make vector search faster by narrowing down the set of vectors compared against each query. 
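As a simplified illustration of why an index helps, the sketch below mimics the idea behind an inverted-file (IVF) index in plain Python: it groups vectors around `NLIST` centroids (the role of Milvus's `nlist` parameter) and, at query time, scans only the `NPROBE` closest groups (the role of `nprobe`) instead of every vector. The random toy data, the sizes, and the few rounds of k-means are assumptions made for the example; this is not how Milvus implements `IVF_FLAT` internally.

```python
import math
import random

random.seed(42)
DIM, N, NLIST, NPROBE = 8, 1000, 16, 2  # toy sizes (assumed)
vectors = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(N)]


def l2(a, b):
    """Euclidean (L2) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_centroid(v, centroids):
    """Index of the centroid closest to v."""
    return min(range(len(centroids)), key=lambda c: l2(v, centroids[c]))


# "Train" the index: sample starting centroids, then refine with a few k-means rounds
centroids = random.sample(vectors, NLIST)
for _ in range(5):
    buckets = [[] for _ in range(NLIST)]
    for v in vectors:
        buckets[nearest_centroid(v, centroids)].append(v)
    centroids = [
        [sum(col) / len(b) for col in zip(*b)] if b else centroids[c]
        for c, b in enumerate(buckets)
    ]

# Inverted lists: centroid id -> ids of the vectors assigned to that centroid
inverted_lists = {c: [] for c in range(NLIST)}
for idx, v in enumerate(vectors):
    inverted_lists[nearest_centroid(v, centroids)].append(idx)


def ivf_search(query, k=4, nprobe=NPROBE):
    """Scan only the nprobe closest clusters; return top-k ids and how many vectors were compared."""
    probe = sorted(range(NLIST), key=lambda c: l2(query, centroids[c]))[:nprobe]
    candidates = [i for c in probe for i in inverted_lists[c]]
    ranked = sorted(candidates, key=lambda i: l2(query, vectors[i]))
    return ranked[:k], len(candidates)


def brute_force(query, k=4):
    """Exact search: compare the query against every stored vector."""
    return sorted(range(N), key=lambda i: l2(query, vectors[i]))[:k]


query = vectors[0]
ids, scanned = ivf_search(query)
print("IVF top-4:", ids, "after comparing", scanned, "of", N, "vectors")
print("Exact top-4:", brute_force(query))
```

Because the query is one of the stored vectors, both searches return its own id first, while the IVF search compares the query against only a fraction of the 1,000 vectors. The trade-off is that a true neighbor sitting in an unprobed cluster can be missed; raising `nprobe` improves recall at the cost of speed.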
The following code creates an \u003ccode\u003eIVF_FLAT\u003c/code\u003e index on the \u003ccode\u003eembeddings\u003c/code\u003e field, using the \u003ccode\u003eL2\u003c/code\u003e (Euclidean distance) metric and setting \u003ccode\u003enlist\u003c/code\u003e, the number of clusters, to 128:\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003e# IVF_FLAT index on the embeddings field, L2 metric, 128 clusters\nindex_params = client.prepare_index_params()\nindex_params.add_index(field_name=\u0026quot;embeddings\u0026quot;, index_type=\u0026quot;IVF_FLAT\u0026quot;, metric_type=\u0026quot;L2\u0026quot;, params={\u0026quot;nlist\u0026quot;: 128})\nclient.create_index(collection_name=\u0026quot;milvus_demo\u0026quot;, index_params=index_params)\n\u003c/code\u003e\u003c/pre\u003e\n\u003ch3 id=\"5-Search-Vector-Embeddings\" class=\"common-anchor-header\"\u003e5. 
Search Vector Embeddings\u003c/h3\u003e\u003cp\u003eTo search the vector embeddings, load the collection into memory with \u003ccode\u003eload_collection()\u003c/code\u003e and then run a vector similarity search. The following query looks for the four words closest to “cold”; \u003ccode\u003enprobe\u003c/code\u003e sets how many of the index’s clusters are searched:\u003c/p\u003e\n\u003cpre\u003e\u003ccode\u003eclient.load_collection(collection_name=\u0026quot;milvus_demo\u0026quot;)\n\n# Find the 4 words whose embeddings are closest to \u0026quot;cold\u0026quot;\nsearch_params = {\u0026quot;metric_type\u0026quot;: \u0026quot;L2\u0026quot;, \u0026quot;params\u0026quot;: {\u0026quot;nprobe\u0026quot;: 10}}\nresult = client.search(collection_name=\u0026quot;milvus_demo\u0026quot;, data=[cold.tolist()], limit=4, search_params=search_params, output_fields=[\u0026quot;words\u0026quot;])\nfor hit in result[0]:\n    print(hit[\u0026quot;entity\u0026quot;][\u0026quot;words\u0026quot;], hit[\u0026quot;distance\u0026quot;])\n\u003c/code\u003e\u003c/pre\u003e\n\u003ch2 id=\"Best-Practices-for-Using-Vector-Embeddings\" class=\"common-anchor-header\"\u003eBest Practices for Using Vector Embeddings\u003c/h2\u003e\u003cp\u003eGetting good results from vector embeddings requires choosing and maintaining embedding models carefully. The best practices for using vector embeddings are:\u003c/p\u003e\n\u003ch3 id=\"1-Selecting-the-Right-Embedding-Model\" class=\"common-anchor-header\"\u003e1. Selecting the Right Embedding Model\u003c/h3\u003e\u003cp\u003eDifferent embedding models suit different tasks. For example, CLIP is designed for multimodal tasks involving images and text, while GloVe is designed for NLP tasks. 
\u003ca href=\"https://zilliz.com/blog/choosing-the-right-embedding-model-for-your-data\"\u003eSelecting embedding models\u003c/a\u003e based on your data and computational constraints leads to better results.\u003c/p\u003e\n\u003ch3 id=\"2-Optimizing-Embedding-Performance\" class=\"common-anchor-header\"\u003e2. Optimizing Embedding Performance\u003c/h3\u003e\u003cp\u003ePre-trained models like BERT and CLIP offer a good starting point, and fine-tuning them on task-specific data can improve their performance further.\u003c/p\u003e\n\u003cp\u003eHyperparameter tuning, such as adjusting the learning rate or batch size, helps find the combination of settings that gives the best performance. Data augmentation is another way to improve embedding model performance: it artificially increases the size and diversity of the training data, which makes it useful for tasks with limited data.\u003c/p\u003e\n\u003ch3 id=\"3-Monitoring-Embedding-Model\" class=\"common-anchor-header\"\u003e3. 
Monitoring Embedding Models\u003c/h3\u003e\u003cp\u003eContinuously monitoring embedding models tracks their performance over time. This reveals model degradation early so that you can fine-tune the models to keep results accurate.\u003c/p\u003e\n\u003ch3 id=\"4-Considering-Evolving-Needs\" class=\"common-anchor-header\"\u003e4. Considering Evolving Needs\u003c/h3\u003e\u003cp\u003eEvolving data needs, such as growing data volumes or changing data formats, may reduce accuracy. 
Retraining and fine-tuning models as these needs change helps maintain their performance.\u003c/p\u003e\n\u003ch2 id=\"Common-Pitfalls-and-How-to-Avoid-Them\" class=\"common-anchor-header\"\u003eCommon Pitfalls and How to Avoid Them\u003c/h2\u003e\u003ch4 id=\"Change-in-Model-Architecture\" class=\"common-anchor-header\"\u003eChange in Model Architecture\u003c/h4\u003e\u003cp\u003eFine-tuning and hyperparameter tuning change a model's weights and, in some cases, its underlying architecture. 
Because the model generates the vector embeddings, significant changes to it produce different embeddings for the same input, and these may no longer be comparable with the vectors you have already stored.\u003c/p\u003e\n\u003cp\u003eTo improve model performance without changing the embeddings completely, avoid retraining all of the model's parameters from scratch. Instead, fine-tune pre-trained models such as Word2Vec or BERT for your specific task.\u003c/p\u003e\n\u003ch4 id=\"Data-Drift\" class=\"common-anchor-header\"\u003eData Drift\u003cbutton data-href=\"#Data-Drift\" class=\"anchor-icon\" translate=\"no\"\u003e\n \u003csvg\n aria-hidden=\"true\"\n focusable=\"false\"\n height=\"20\"\n version=\"1.1\"\n viewBox=\"0 0 16 16\"\n width=\"16\"\n \u003e\n \u003cpath\n fill=\"#0092E4\"\n fill-rule=\"evenodd\"\n d=\"M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z\"\n \u003e\u003c/path\u003e\n \u003c/svg\u003e\n \u003c/button\u003e\u003c/h4\u003e\u003cp\u003eData drift happens when incoming data diverges from the data the model was trained on. This can result in inaccurate vector embeddings. 
Continuously monitoring incoming data helps ensure that it stays consistent with what the model expects.\u003c/p\u003e\n\u003ch4 id=\"Misleading-Evaluation-Metrics\" class=\"common-anchor-header\"\u003eMisleading Evaluation Metrics\u003cbutton data-href=\"#Misleading-Evaluation-Metrics\" class=\"anchor-icon\" translate=\"no\"\u003e\n \u003csvg\n aria-hidden=\"true\"\n focusable=\"false\"\n height=\"20\"\n version=\"1.1\"\n viewBox=\"0 0 16 16\"\n width=\"16\"\n \u003e\n \u003cpath\n fill=\"#0092E4\"\n fill-rule=\"evenodd\"\n d=\"M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z\"\n \u003e\u003c/path\u003e\n \u003c/svg\u003e\n \u003c/button\u003e\u003c/h4\u003e\u003cp\u003eDifferent evaluation metrics suit different tasks. Choosing metrics arbitrarily can produce a misleading analysis that hides the model's true performance.\u003c/p\u003e\n\u003cp\u003ePick the evaluation metrics that suit your task. 
For example, use cosine similarity to measure semantic similarity between embeddings and the BLEU score to evaluate translation tasks.\u003c/p\u003e\n\u003ch2 id=\"Further-Resources\" class=\"common-anchor-header\"\u003eFurther Resources\u003cbutton data-href=\"#Further-Resources\" class=\"anchor-icon\" translate=\"no\"\u003e\n \u003csvg\n aria-hidden=\"true\"\n focusable=\"false\"\n height=\"20\"\n version=\"1.1\"\n viewBox=\"0 0 16 16\"\n width=\"16\"\n \u003e\n \u003cpath\n fill=\"#0092E4\"\n fill-rule=\"evenodd\"\n d=\"M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z\"\n \u003e\u003c/path\u003e\n \u003c/svg\u003e\n \u003c/button\u003e\u003c/h2\u003e\u003cp\u003eThe best way to deepen your understanding of vector embeddings is to study relevant resources, practice, and engage with industry professionals. 
Below are some ways to explore vector embeddings in depth:\u003c/p\u003e\n\u003cul\u003e\n\u003cli\u003eZilliz Learn series: \u003ca href=\"https://zilliz.com/learn\"\u003ehttps://zilliz.com/learn\u003c/a\u003e\u003c/li\u003e\n\u003cli\u003eZilliz Glossaries: \u003ca href=\"https://zilliz.com/glossary\"\u003ehttps://zilliz.com/glossary\u003c/a\u003e\u003c/li\u003e\n\u003cli\u003eEmbedding models and their integration with Milvus: \u003ca href=\"https://zilliz.com/product/integrations\"\u003ehttps://zilliz.com/product/integrations\u003c/a\u003e\u003c/li\u003e\n\u003cli\u003eHugging Face Models: \u003ca href=\"https://huggingface.co/models\"\u003ehttps://huggingface.co/models\u003c/a\u003e\u003c/li\u003e\n\u003cli\u003eAcademic papers:\n\u003cul\u003e\n\u003cli\u003e\u003ca href=\"https://arxiv.org/abs/1810.04805\"\u003eBERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (arXiv:1810.04805)\u003c/a\u003e\u003c/li\u003e\n\u003cli\u003e\u003ca href=\"https://arxiv.org/abs/2004.12832\"\u003eColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT (arXiv:2004.12832)\u003c/a\u003e\u003c/li\u003e\n\u003cli\u003e\u003ca href=\"https://arxiv.org/abs/2109.10086\"\u003eSPLADE v2: Sparse Lexical and Expansion Model for Information Retrieval (arXiv:2109.10086)\u003c/a\u003e\u003c/li\u003e\n\u003c/ul\u003e\n\u003c/li\u003e\n\u003cli\u003eBenchmark tool for evaluating vector databases: \u003ca href=\"https://zilliz.com/vector-database-benchmark-tool?database=ZillizCloud%2CMilvus%2CElasticCloud%2CPgVector%2CPinecone%2CQdrantCloud%2CWeaviateCloud\u0026amp;dataset=medium\u0026amp;filter=none%2Clow%2Chigh\u0026amp;tab=1\"\u003eVectorDBBench: An Open-Source VectorDB Benchmark 
Tool\u003c/a\u003e\u003c/li\u003e\n\u003c/ul\u003e\n\u003ch3 id=\"2-Community-Engagement\" class=\"common-anchor-header\"\u003e2. Community Engagement\u003cbutton data-href=\"#2-Community-Engagement\" class=\"anchor-icon\" translate=\"no\"\u003e\n \u003csvg\n aria-hidden=\"true\"\n focusable=\"false\"\n height=\"20\"\n version=\"1.1\"\n viewBox=\"0 0 16 16\"\n width=\"16\"\n \u003e\n \u003cpath\n fill=\"#0092E4\"\n fill-rule=\"evenodd\"\n d=\"M4 9h1v1H4c-1.5 0-3-1.69-3-3.5S2.55 3 4 3h4c1.45 0 3 1.69 3 3.5 0 1.41-.91 2.72-2 3.25V8.59c.58-.45 1-1.27 1-2.09C10 5.22 8.98 4 8 4H4c-.98 0-2 1.22-2 2.5S3 9 4 9zm9-3h-1v1h1c1 0 2 1.22 2 2.5S13.98 12 13 12H9c-.98 0-2-1.22-2-2.5 0-.83.42-1.64 1-2.09V6.25c-1.09.53-2 1.84-2 3.25C6 11.31 7.55 13 9 13h4c1.45 0 3-1.69 3-3.5S14.5 6 13 6z\"\n \u003e\u003c/path\u003e\n \u003c/svg\u003e\n \u003c/button\u003e\u003c/h3\u003e\u003cp\u003eJoin \u003ca href=\"https://discord.com/invite/8uyFbECzPX\"\u003eour Discord\u003c/a\u003e community to connect with GenAI developers from various industries and discuss everything related to vector embeddings, vector databases, and AI. 
Follow relevant discussions on Stack Overflow, Reddit, and \u003ca href=\"https://github.com/milvus-io/milvus\"\u003eGitHub\u003c/a\u003e to learn about potential issues you might encounter when working with embeddings and to improve your debugging skills.\u003c/p\u003e\n\u003cp\u003eStaying up to date with resources and engaging with the community helps your skills grow as the technology advances, giving you a competitive advantage in the AI industry.\u003c/p\u003e\n","abstract":"In this blog post, we will explain the concept of vector embeddings and explore their applications, best practices, and tools for working with embeddings.\n","url":"everything-you-should-know-about-vector-embeddings","codeList":["!pip install -U pymilvus gensim\n","curl -sfL https://raw.githubusercontent.com/milvus-io/milvus/master/scripts/standalone_embed.sh -o standalone_embed.sh\nbash standalone_embed.sh start\n","from pymilvus import MilvusClient\n\nclient = MilvusClient(\"milvus_demo.db\")\n\n","import gensim.downloader as api\nfrom pymilvus import connections, Collection, FieldSchema, CollectionSchema, DataType\n\n# connect to Milvus Lite (use uri=\"http://localhost:19530\" for Milvus standalone)\nconnections.connect(uri=\"milvus_demo.db\")\n\n# create a collection\nfields = [ FieldSchema(name=\"pk\", dtype=DataType.INT64, is_primary=True, auto_id=False), FieldSchema(name=\"words\", dtype=DataType.VARCHAR, max_length=50), FieldSchema(name=\"embeddings\", dtype=DataType.FLOAT_VECTOR, dim=50)]\nschema = CollectionSchema(fields, \"Demo to store and retrieve embeddings\")\ndemo_milvus = Collection(\"milvus_demo\", schema)\n\n# load the pre-trained model from gensim\nmodel = api.load(\"glove-wiki-gigaword-50\")\n\n# generate embeddings\nice = model['ice']\nwater = model['water']\ncold = model['cold']\ntree = model['tree']\nman = model['man']\nwoman = model['woman']\nchild = model['child']\nfemale = model['female']\n\n","# Insert data into the collection (column-based: one list per field)\ndata = [\n    [1, 2, 3, 4, 5, 6, 7, 8],  # field pk\n    ['ice', 'water', 'cold', 'tree', 'man', 'woman', 'child', 'female'],  # field words\n    [ice, water, cold, tree, man, woman, child, female],  # 
field embeddings\n]\ninsert_result = demo_milvus.insert(data)\n\n# After the final entity is inserted, call flush so that no growing segments are left in memory\ndemo_milvus.flush()\n\n","index = { \"index_type\": \"IVF_FLAT\", \"metric_type\": \"L2\", \"params\": {\"nlist\": 128},}\ndemo_milvus.create_index(\"embeddings\", index)\n\n","demo_milvus.load()\n# performs a vector similarity search:\ndata = [cold]\nsearch_params = { \"metric_type\": \"L2\", \"params\": {\"nprobe\": 10},}\nresult = demo_milvus.search(data, \"embeddings\", search_params, limit=4, output_fields=[\"words\"])\n\n"],"image":{"id":3419,"name":"Everything You Should Know about Vector Embeddings (1).png","alternativeText":"","caption":"","width":2400,"height":1256,"formats":{"large":{"ext":".png","url":"https://assets.zilliz.com/large_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2.png","hash":"large_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2","mime":"image/png","name":"large_Everything You Should Know about Vector Embeddings (1).png","path":null,"size":474.5,"width":1000,"height":523},"small":{"ext":".png","url":"https://assets.zilliz.com/small_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2.png","hash":"small_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2","mime":"image/png","name":"small_Everything You Should Know about Vector Embeddings (1).png","path":null,"size":132.77,"width":500,"height":262},"medium":{"ext":".png","url":"https://assets.zilliz.com/medium_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2.png","hash":"medium_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2","mime":"image/png","name":"medium_Everything You Should Know about Vector Embeddings 
(1).png","path":null,"size":261.12,"width":750,"height":393},"thumbnail":{"ext":".png","url":"https://assets.zilliz.com/thumbnail_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2.png","hash":"thumbnail_Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2","mime":"image/png","name":"thumbnail_Everything You Should Know about Vector Embeddings (1).png","path":null,"size":48.18,"width":245,"height":128}},"hash":"Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2","ext":".png","mime":"image/png","size":2557.75,"url":"https://assets.zilliz.com/Everything_You_Should_Know_about_Vector_Embeddings_1_3d19f86ad2.png","previewUrl":null,"provider":"s3","provider_metadata":null,"created_by":82,"updated_by":82,"created_at":"2024-05-06T23:56:31.448Z","updated_at":"2024-05-06T23:56:31.464Z"},"canonical_rel":"https://zilliz.com/learn/everything-you-should-know-about-vector-embeddings","read_time":9,"blogId":"learn-189","meta_title":"An Introduction to Vector Embeddings: What They Are and How to Use Them","meta_description":"In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.\n","meta_keywords":null,"display_updated_time":"Jul 01, 2025"},"anchors":[{"label":"What are Vector Embeddings?","href":"What-are-Vector-Embeddings","type":2,"isActive":false},{"label":"Types of Vector Embeddings","href":"Types-of-Vector-Embeddings","type":2,"isActive":false},{"label":"How are Vector Embeddings Created?","href":"How-are-Vector-Embeddings-Created","type":2,"isActive":false},{"label":"What are Vector Embeddings Used for?","href":"What-are-Vector-Embeddings-Used-for","type":2,"isActive":false},{"label":"Storing, Indexing, and Retrieving Vector Embeddings with Milvus","href":"Storing-Indexing-and-Retrieving-Vector-Embeddings-with-Milvus","type":2,"isActive":false},{"label":"Best Practices for Using Vector 
Embeddings","href":"Best-Practices-for-Using-Vector-Embeddings","type":2,"isActive":false},{"label":"Common Pitfalls and How to Avoid Them","href":"Common-Pitfalls-and-How-to-Avoid-Them","type":2,"isActive":false},{"label":"Further Resources","href":"Further-Resources","type":2,"isActive":false}],"seriesName":"Vector Database 101: Everything You Need to Know","seriesArticles":[{"id":105,"title":"Introduction to Unstructured Data","language":null,"keywords":null,"published_at":"2022-09-30T06:15:45.722Z","created_by":18,"updated_by":60,"created_at":"2022-09-30T06:15:18.726Z","updated_at":"2025-03-24T09:01:42.846Z","featured":true,"abstract":"Buckle up for the first tutorial in our Vector Database 101 series and untangle the intricacy around Milvus with us every week. ","sub_title":"Everything about unstructured data - what they are, how they differ from traditional data types, and the paradigm shift called for by the need to efficiently process them.","author":"Zilliz","display_time":"2022-09-29T19:00:00.000Z","home_order":"a","isNews":null,"url":"introduction-to-unstructured-data","show_in_learn":false,"order_in_learn":null,"category_order":null,"category_learn":3,"deploy_time":null,"author_info":null,"canonical_rel":"https://zilliz.com/learn/introduction-to-unstructured-data","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"68e4b3354d73","mediumUrl":"https://medium.com/@zilliz_learn/introduction-to-unstructured-data-68e4b3354d73"},"meta_title":"Introduction to Unstructured Data","meta_description":"Buckle up for the first tutorial in our Vector Database 101 series and untangle the intricacy around Milvus with us every week. 
","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"blog","read_time":11,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":5,"title":"What is a Vector Database and how does it work: Implementation, Optimization \u0026 Scaling for Production Applications","sub_title":"","featured":null,"abstract":"A vector database stores, indexes, and searches vector embeddings generated by machine learning models for fast information retrieval and similarity search. ","display_time":"2025-03-01","url":"what-is-vector-database","home_order":null,"published_at":"2021-12-22T07:35:10.971Z","created_by":23,"updated_by":60,"created_at":"2021-12-22T07:10:46.525Z","updated_at":"2025-05-07T07:39:31.523Z","show_in_learn":true,"order_in_learn":"0","category_order":null,"category_learn":3,"author":3,"canonical_rel":"https://zilliz.com/learn/what-is-vector-database","repost_to_medium":true,"repost_state":{"devto":{"status":"success"},"status":"success","mediumId":"c7409912cb69","mediumUrl":"https://medium.com/@zilliz_learn/what-is-a-vector-database-c7409912cb69"},"meta_title":"What is a Vector Database and How Does It Work?","meta_description":"A vector database stores, indexes, and searches unstructured data through vector embeddings for fast information retrieval and similarity search. 
","meta_keywords":"Vector Database, Vector Embeddings, Vector Search, LLM, RAG","invisible":null,"locale":"en","repost_to_devto":true,"belong":"learn","read_time":20,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":68,"title":"Understanding Vector Databases: Compare Vector Databases, Vector Search Libraries, and Vector Search Plugins","sub_title":"Compare Vector Databases, Vector Search Libraries and Plugins","featured":false,"abstract":"Deep diving into better understanding vector databases and comparing them to vector search libraries and vector search plugins.","display_time":"2023-10-23","url":"comparing-vector-database-vector-search-library-and-vector-search-plugin","home_order":null,"published_at":"2023-10-24T15:49:14.819Z","created_by":60,"updated_by":60,"created_at":"2023-10-24T15:35:52.810Z","updated_at":"2025-03-24T08:56:57.963Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":3,"canonical_rel":null,"repost_to_medium":true,"repost_state":{"status":"success","mediumId":"872f9eb4ac80","mediumUrl":"https://medium.com/@zilliz_learn/understanding-vector-databases-compare-vector-databases-vector-search-libraries-and-vector-872f9eb4ac80"},"meta_title":"Compare Vector Databases, Vector Search Libraries and Plugins","meta_description":"Deep diving into better understanding vector databases and comparing them to vector search libraries and vector search plugins.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":109,"title":"Introduction to Milvus Vector Database","language":null,"keywords":null,"published_at":"2022-10-14T07:50:41.587Z","created_by":18,"updated_by":60,"created_at":"2022-10-14T07:50:34.637Z","updated_at":"2025-07-01T00:00:23.728Z","featured":false,"abstract":"Zilliz tells the story about building the world's very first open-source vector database, Milvus. 
The start, the pivotal, and the future.","sub_title":"How we built the world's most popular open-source vector database.","author":"Zilliz","display_time":"2022-10-13T19:00:00.000Z","home_order":"null","isNews":null,"url":"introduction-to-milvus-vector-database","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"deploy_time":null,"author_info":null,"canonical_rel":null,"repost_to_medium":null,"repost_state":null,"meta_title":null,"meta_description":null,"meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"blog","read_time":10,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":111,"title":"Milvus Quickstart: Install Milvus Vector Database in 5 Minutes","language":null,"keywords":null,"published_at":"2022-10-27T01:38:41.303Z","created_by":18,"updated_by":60,"created_at":"2022-10-26T02:22:05.223Z","updated_at":"2024-10-09T07:58:56.546Z","featured":false,"abstract":"Milvus vector database supports two modes of deployments: standalone and cluster. ","sub_title":"Getting started with Milvus - the world's most popular open-source vector database.","author":"Zilliz","display_time":"2022-10-21T19:00:00.000Z","home_order":"null","isNews":null,"url":"milvus-vector-database-quickstart","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"deploy_time":null,"author_info":null,"canonical_rel":"https://zilliz.com/learn/milvus-vector-database-quickstart","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"006df62927b3","mediumUrl":"https://medium.com/@zilliz_learn/milvus-quickstart-install-milvus-vector-database-in-5-minutes-006df62927b3"},"meta_title":"Milvus Quickstart: Install Milvus Vector Database in 5 Minutes","meta_description":"Milvus vector database supports two modes of deployments: standalone and cluster. 
","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"blog","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":120,"title":"Introduction to Vector Similarity Search","language":null,"keywords":null,"published_at":"2022-11-04T05:34:05.259Z","created_by":18,"updated_by":125,"created_at":"2022-11-04T03:13:45.957Z","updated_at":"2025-03-27T06:06:23.116Z","featured":true,"abstract":"Learn what vector search is and the metrics pertinent to decide the distance (or similarity) between objects.","sub_title":"Vector similarity search explained through a word embedding example.","author":"Zilliz","display_time":"2023-11-03T19:00:00.000Z","home_order":"null","isNews":null,"url":"vector-similarity-search","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"deploy_time":null,"author_info":null,"canonical_rel":null,"repost_to_medium":null,"repost_state":null,"meta_title":"Introduction to Vector Similarity Search","meta_description":"Learn what vector search is and the metrics pertinent to decide the distance (or similarity) between objects.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"blog","read_time":13,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":121,"title":"Everything You Need to Know about Vector Index Basics","language":null,"keywords":null,"published_at":"2022-11-22T18:49:24.042Z","created_by":18,"updated_by":53,"created_at":"2022-11-17T04:32:35.998Z","updated_at":"2025-03-01T19:40:02.223Z","featured":false,"abstract":"This tutorial analyzes the components of a modern indexer before going over two simplest indexing strategies - flat indexing and inverted file (IVF) indexes.","sub_title":"Vector Index Basics and the Inverted File Index 
","author":"Zilliz","display_time":"2023-05-31T15:00:00.000Z","home_order":"null","isNews":null,"url":"vector-index","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"deploy_time":null,"author_info":null,"canonical_rel":null,"repost_to_medium":null,"repost_state":null,"meta_title":null,"meta_description":null,"meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"blog","read_time":10,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":146,"title":"Scalar Quantization and Product Quantization","language":null,"keywords":null,"published_at":"2023-03-29T15:40:33.915Z","created_by":53,"updated_by":60,"created_at":"2023-03-28T22:56:42.235Z","updated_at":"2025-06-01T00:00:25.077Z","featured":false,"abstract":"A hands-on dive into scalar quantization (integer quantization) and product quantization with Python.","sub_title":"Learn about Scalar Quantization and Product Quantization","author":"Zilliz","display_time":"2023-03-28T19:00:00.000Z","home_order":"null","isNews":null,"url":"scalar-quantization-and-product-quantization","show_in_learn":false,"order_in_learn":null,"category_order":null,"category_learn":3,"deploy_time":null,"author_info":null,"canonical_rel":null,"repost_to_medium":null,"repost_state":{"status":"success","mediumId":"66099f49b23d","mediumUrl":"https://medium.com/@zilliz_learn/vector-database-101-scalar-quantization-and-product-quantization-66099f49b23d"},"meta_title":"Scalar Quantization and Product Quantization","meta_description":"A hands-on dive into scalar quantization (integer quantization) and product quantization with Python.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"blog","read_time":10,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":234,"title":"Hierarchical Navigable Small Worlds (HNSW) ","sub_title":null,"featured":null,"abstract":"Hierarchical Navigable Small World (HNSW) is a graph-based 
algorithm that performs approximate nearest neighbor (ANN) searches in vector databases.","display_time":"2024-07-17","url":"hierarchical-navigable-small-worlds-HNSW","home_order":null,"published_at":"2024-07-28T17:28:32.243Z","created_by":60,"updated_by":60,"created_at":"2024-07-28T16:13:21.363Z","updated_at":"2024-07-28T17:38:11.060Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"author":null,"canonical_rel":"","repost_to_medium":false,"repost_state":null,"meta_title":"Understanding Hierarchical Navigable Small Worlds (HNSW) ","meta_description":"Hierarchical Navigable Small World (HNSW) is a graph-based algorithm that performs approximate nearest neighbor (ANN) searches in vector databases. ","meta_keywords":"Hierarchical Navigable Small Worlds, HNSW, Vector Database, Vector Search, hnsw algorithm","invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":13,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":40,"title":"Approximate Nearest Neighbors Oh Yeah (Annoy)","sub_title":"Annoy: A Deep Dive into Approximate Nearest Neighbors - Vector Database 101","featured":true,"abstract":"Discover the capabilities of Annoy, an innovative algorithm revolutionizing approximate nearest neighbor searches for enhanced efficiency and precision.","display_time":"2023-05-25","url":"approximate-nearest-neighbor-oh-yeah-ANNOY","home_order":null,"published_at":"2023-05-27T12:09:24.955Z","created_by":60,"updated_by":124,"created_at":"2023-05-26T02:13:53.736Z","updated_at":"2025-03-28T08:36:07.897Z","show_in_learn":true,"order_in_learn":"0","category_order":null,"category_learn":3,"author":3,"canonical_rel":"https://zilliz.com/learn/approximate-nearest-neighbor-oh-yeah-ANNOY","repost_to_medium":null,"repost_state":null,"meta_title":"Approximate Nearest Neighbors Oh Yeah (Annoy)","meta_description":"Discover the capabilities of Annoy, an innovative algorithm revolutionizing approximate nearest 
neighbor searches for enhanced efficiency and precision.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":11,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":67,"title":"Choosing the Right Vector Index for Your Project","sub_title":"Guidelines on choosing the right vector index for your project","featured":false,"abstract":"Understanding in-memory vector search algorithms, indexing strategies, and guidelines on choosing the right vector index for your project.","display_time":"2023-07-17","url":"choosing-right-vector-index-for-your-project","home_order":null,"published_at":"2023-07-17T05:43:40.185Z","created_by":18,"updated_by":53,"created_at":"2023-07-11T05:44:08.139Z","updated_at":"2024-10-11T03:47:15.956Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":2,"author":3,"canonical_rel":"https://thesequence.substack.com/p/guest-post-choosing-the-right-vector","repost_to_medium":null,"repost_state":null,"meta_title":null,"meta_description":null,"meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":69,"title":"DiskANN and the Vamana Algorithm","sub_title":"DiskANN and the Vamana Algorithm","featured":null,"abstract":"Dive into DiskANN, a graph-based vector index, and Vamana, the core data structure behind DiskANN. 
","display_time":"2023-10-29","url":"DiskANN-and-the-Vamana-Algorithm","home_order":null,"published_at":"2023-10-30T00:39:49.412Z","created_by":53,"updated_by":60,"created_at":"2023-10-30T00:32:28.418Z","updated_at":"2025-06-01T00:00:26.961Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":3,"canonical_rel":"https://zilliz.com/learn/DiskANN-and-the-Vamana-Algorithm","repost_to_medium":null,"repost_state":null,"meta_title":"DiskANN and the Vamana Algorithm","meta_description":"Dive into DiskANN, a graph-based vector index, and Vamana, the core data structure behind DiskANN. ","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":84,"title":"Safeguard Data Integrity: Backup and Recovery in Vector Databases","sub_title":null,"featured":null,"abstract":"This blog explores data backup and recovery in vectorDBs, their challenges, various methods, and specialized tools to fortify the security of your data assets.","display_time":"2024-03-14","url":"vector-database-backup-and-recovery-safeguard-data-integrity","home_order":null,"published_at":"2024-03-15T01:18:18.855Z","created_by":60,"updated_by":60,"created_at":"2024-03-15T00:54:06.900Z","updated_at":"2025-04-01T00:00:21.781Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"author":45,"canonical_rel":"https://zilliz.com/learn/vector-database-backup-and-recovery-safeguard-data-integrity","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"7de5864c730b","mediumUrl":"https://medium.com/@zilliz_learn/safeguard-data-integrity-backup-and-recovery-in-vector-databases-7de5864c730b"},"meta_title":"Safeguard Data Integrity: Backup and Recovery in VectorDBs","meta_description":"This blog explores data backup and recovery in vectorDBs, their challenges, various methods, and specialized tools to fortify the 
security of your data assets.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":5,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":90,"title":"Dense Vectors in AI: Maximizing Data Potential in Machine Learning","sub_title":null,"featured":null,"abstract":"This article zooms in on dense vectors, uncovering their advantages compared to sparse vectors and how they are widely used in ML algorithms across various domains. ","display_time":"2024-03-22","url":"dense-vector-in-ai-maximize-data-potential-in-machine-learning","home_order":null,"published_at":"2024-03-22T07:48:51.796Z","created_by":60,"updated_by":60,"created_at":"2024-03-22T07:48:07.919Z","updated_at":"2025-06-01T00:00:27.922Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"author":122,"canonical_rel":"https://zilliz.com/learn/dense-vector-in-ai-maximize-data-potential-in-machine-learning","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"cbb6268f06e3","mediumUrl":"https://medium.com/@zilliz_learn/dense-vectors-in-ai-maximizing-data-potential-in-machine-learning-cbb6268f06e3"},"meta_title":"Dense Vectors in AI: Maximizing Data Potential in ML","meta_description":"This article zooms in on dense vectors, uncovering their advantages compared to sparse vectors and how they are used in ML algorithms across various areas. ","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":4,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":91,"title":"Integrating Vector Databases with Cloud Computing: A Strategic Solution to Modern Data Challenges ","sub_title":null,"featured":null,"abstract":"Integrating vector databases and cloud computing creates a powerful infrastructure that significantly enhances the management of large-scale, complex data in AI and machine learning. 
","display_time":"2024-03-22","url":"integrating-vector-databases-with-cloud-computing-solution-to-modern-data-challenges","home_order":null,"published_at":"2024-03-23T04:59:48.229Z","created_by":60,"updated_by":53,"created_at":"2024-03-23T04:53:32.283Z","updated_at":"2024-08-11T16:27:57.110Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":40,"author":123,"canonical_rel":"https://zilliz.com/learn/integrating-vector-databases-with-cloud-computing-solution-to-modern-data-challenges","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"346537cf07e2","mediumUrl":"https://medium.com/@zilliz_learn/integrating-vector-databases-with-cloud-computing-a-strategic-solution-to-modern-data-challenges-346537cf07e2"},"meta_title":"Integrating VectorDBs with Cloud Computing Against Data Challenges","meta_description":"Integrating vector databases and cloud computing creates a powerful infrastructure that significantly enhances the management of large-scale, complex data in AI and machine learning. ","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":106,"title":"A Beginner's Guide to Implementing Vector Databases","sub_title":null,"featured":null,"abstract":"A Beginner's Guide to Vector Databases, including key considerations and steps to get started with a vector database and implementation best practices. 
","display_time":"2024-03-27","url":"beginner-guide-to-implementing-vector-databases","home_order":null,"published_at":"2024-03-29T07:49:18.435Z","created_by":60,"updated_by":60,"created_at":"2024-03-29T07:49:15.667Z","updated_at":"2025-03-12T05:31:54.666Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"author":131,"canonical_rel":"https://zilliz.com/learn/beginner-guide-to-implementing-vector-databases","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"3393c0e87fb5","mediumUrl":"https://medium.com/@zilliz_learn/a-beginners-guide-to-implementing-vector-databases-3393c0e87fb5"},"meta_title":"A Beginner's Guide to Implementing Vector Databases","meta_description":"A Beginner's Guide to Vector Databases, including key considerations and steps to get started with a vector database and implementation best practices. ","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":7,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":108,"title":"Maintaining Data Integrity in Vector Databases","sub_title":null,"featured":false,"abstract":"Guaranteeing that data is correct, consistent, and dependable throughout its lifecycle is important in data management, and especially in vector databases","display_time":"2024-02-13","url":"maintaining-data-integrity-in-vector-databases","home_order":null,"published_at":"2024-03-30T00:44:05.723Z","created_by":82,"updated_by":60,"created_at":"2024-03-30T00:43:47.984Z","updated_at":"2025-05-01T00:00:23.529Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":127,"canonical_rel":null,"repost_to_medium":false,"repost_state":null,"meta_title":"Maintaining Data Integrity in Vector Databases","meta_description":"Guaranteeing that data is correct, consistent, and dependable throughout its lifecycle is important in data management, especially in vector 
databases","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":4,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":105,"title":"From Rows and Columns to Vectors: The Evolutionary Journey of Database Technologies ","sub_title":"Vector databases are the latest frontier in this evolutionary path of database systems. ","featured":null,"abstract":"From structured SQL and NoSQL to cutting-edge vector databases, this journey traces a significant transformation in data management strategies.\n","display_time":"2024-02-02","url":"from-sql-and-nosql-to-vectors-database-evolution-journey","home_order":null,"published_at":"2024-03-30T19:26:56.961Z","created_by":60,"updated_by":82,"created_at":"2024-03-29T07:34:28.826Z","updated_at":"2024-07-19T21:44:48.136Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":121,"canonical_rel":"https://zilliz.com/learn/from-sql-and-nosql-to-vectors-database-evolution-journey","repost_to_medium":false,"repost_state":null,"meta_title":"Database Evolution Journey from Rows and Columns to Vectors","meta_description":"From structured SQL and NoSQL to cutting-edge vector databases, this journey traces a significant transformation in data management strategies.\n","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":126,"title":"Decoding Softmax Activation Function","sub_title":null,"featured":null,"abstract":"This article will discuss the Softmax Activation Function, its applications, challenges, and tips for better 
performance.\n","display_time":"2024-04-03","url":"decoding-softmax-understanding-functions-and-impact-in-ai","home_order":null,"published_at":"2024-04-03T21:41:51.210Z","created_by":82,"updated_by":53,"created_at":"2024-04-01T19:28:38.603Z","updated_at":"2024-10-03T20:09:22.933Z","show_in_learn":true,"order_in_learn":"","category_order":null,"category_learn":3,"author":118,"canonical_rel":"https://zilliz.com/learn/decoding-softmax-understanding-functions-and-impact-in-ai","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"29314ba9daad","mediumUrl":"https://medium.com/@zilliz_learn/decoding-softmax-understanding-its-functions-and-impact-in-ai-29314ba9daad"},"meta_title":"Understanding Softmax Activation Function","meta_description":"The softmax function is a mathematical function used in machine learning, particularly in the context of classification tasks. ","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":9,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":140,"title":"Harnessing Product Quantization for Memory Efficiency in Vector Databases","sub_title":null,"featured":null,"abstract":"Exploring product quantization's intricacies and practical implementation through hands-on 
examples.","display_time":"2024-06-22","url":"harnessing-product-quantization-for-memory-efficiency-in-vector-databases","home_order":null,"published_at":"2024-06-24T10:19:01.210Z","created_by":82,"updated_by":21,"created_at":"2024-04-04T18:17:22.932Z","updated_at":"2025-07-01T00:00:26.934Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":125,"canonical_rel":"https://zilliz.com/learn/harnessing-product-quantization-for-memory-efficiency-in-vector-databases","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"73df8defe12f","mediumUrl":"https://medium.com/@zilliz_learn/harnessing-product-quantization-for-memory-efficiency-in-vector-databases-73df8defe12f"},"meta_title":"Harnessing Product Quantization for Memory Efficiency in Vector Databases","meta_description":"Exploring product quantization's intricacies and practical implementation through hands-on examples.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":7,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":145,"title":"How to Spot Search Performance Bottleneck in Vector Databases","sub_title":null,"featured":null,"abstract":"Learn how to monitor search performance, spot bottlenecks, and optimize the performance in a vector database like Milvus.","display_time":"2024-04-10","url":"how-to-spot-search-performance-bottleneck-in-vector-databases","home_order":null,"published_at":"2024-04-12T00:20:48.575Z","created_by":82,"updated_by":60,"created_at":"2024-04-04T18:28:49.821Z","updated_at":"2024-07-15T09:38:05.520Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":89,"canonical_rel":null,"repost_to_medium":false,"repost_state":null,"meta_title":"How to Spot Search Performance Bottleneck in Vector Databases","meta_description":"Learn how to monitor search performance, spot bottlenecks, and optimize the performance in a vector database 
like Milvus.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":13,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":147,"title":"Ensuring High Availability of Vector Databases","sub_title":null,"featured":null,"abstract":"Ensuring high availability is crucial for the operation of vector databases, especially in applications where downtime translates directly into lost productivity and revenue. \n","display_time":"2024-04-18","url":"ensuring-high-availability-of-vector-databases","home_order":null,"published_at":"2024-04-20T20:05:22.833Z","created_by":82,"updated_by":60,"created_at":"2024-04-04T18:34:24.980Z","updated_at":"2025-05-01T00:00:23.126Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":45,"canonical_rel":null,"repost_to_medium":false,"repost_state":null,"meta_title":"Ensuring High Availability of Vector Databases","meta_description":"Ensuring high availability is crucial for the operation of vector databases, especially in applications where downtime translates directly into lost productivity and revenue. \n","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":6,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":152,"title":"Mastering Locality Sensitive Hashing: A Comprehensive Tutorial and Use Cases","sub_title":null,"featured":null,"abstract":"Understand Locality Sensitive Hashing as an effective similarity search technique. 
Learn practical applications, challenges, and Python implementation of LSH.","display_time":"2024-04-01","url":"mastering-locality-sensitive-hashing-a-comprehensive-tutorial","home_order":null,"published_at":"2024-04-07T00:41:00.743Z","created_by":82,"updated_by":60,"created_at":"2024-04-05T17:02:46.589Z","updated_at":"2024-10-15T11:34:57.697Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":118,"canonical_rel":null,"repost_to_medium":false,"repost_state":null,"meta_title":"Mastering Locality Sensitive Hashing: A Comprehensive Tutorial and Use Cases","meta_description":"Understand Locality Sensitive Hashing as an effective similarity search technique. Learn practical applications, challenges, and Python implementation of LSH.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":7,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":154,"title":"Vector Library vs Vector Database: Which One is Right for You?","sub_title":null,"featured":null,"abstract":"Dive into the differences between these two technologies, their strengths, and their practical applications, providing developers with a comprehensive guide to choosing the right tool for their AI projects.\n","display_time":"2024-04-14","url":"vector-library-versus-vector-database","home_order":null,"published_at":"2024-04-14T23:01:07.312Z","created_by":82,"updated_by":53,"created_at":"2024-04-05T17:08:44.859Z","updated_at":"2024-10-03T15:43:40.917Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":128,"canonical_rel":"https://zilliz.com/learn/vector-library-versus-vector-database","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"86b65b7acf7a","mediumUrl":"https://medium.com/@zilliz_learn/vector-library-versus-vector-database-86b65b7acf7a"},"meta_title":"Vector Library versus Vector Database","meta_description":"Dive into the differences 
between these two technologies, their strengths, and their practical applications, providing developers with a comprehensive guide to choosing the right tool for their AI projects.\n","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":10,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":156,"title":"Maximizing GPT 4.x's Potential Through Fine-Tuning Techniques","sub_title":null,"featured":null,"abstract":"This article explores the real potential of GPT 4.x, highlighting its advanced powers and the critical role of fine-tuning in making the model suitable for specific applications. ","display_time":"2024-04-10","url":"maximizing-gpt-4-potential-through-fine-tuning-techniques","home_order":null,"published_at":"2024-04-11T21:30:56.652Z","created_by":82,"updated_by":60,"created_at":"2024-04-05T23:54:07.790Z","updated_at":"2025-07-01T00:00:29.149Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":133,"canonical_rel":"https://zilliz.com/learn/maximizing-gpt-4-potential-through-fine-tuning-techniques","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"a89af0a4ff11","mediumUrl":"https://medium.com/@zilliz_learn/maximizing-gpt-4-xs-potential-through-fine-tuning-techniques-a89af0a4ff11"},"meta_title":"Maximizing GPT 4.x's Potential Through Fine-Tuning Techniques","meta_description":"This article explores the real potential of GPT 4.x, highlighting its advanced powers and the critical role of fine-tuning in making the model suitable for specific applications. 
","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":8,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":158,"title":"Deploying Vector Databases in Multi-Cloud Environments","sub_title":null,"featured":null,"abstract":"Multi-cloud deployment has become increasingly popular for services looking for as much uptime as possible, with organizations leveraging multiple cloud providers to optimize performance, reliability, and cost-efficiency.","display_time":"2024-04-12","url":"Deploying-Vector-Databases-in-Multi-Cloud-Environments","home_order":null,"published_at":"2024-04-14T16:12:40.900Z","created_by":82,"updated_by":60,"created_at":"2024-04-05T23:58:28.738Z","updated_at":"2025-06-01T00:00:28.059Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":3,"author":3,"canonical_rel":"https://zilliz.com/learn/Deploying-Vector-Databases-in-Multi-Cloud-Environments","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"936b39782b0f","mediumUrl":"https://medium.com/@zilliz_learn/deploying-vector-databases-in-multi-cloud-environments-936b39782b0f"},"meta_title":"Deploying Vector Databases in Multi-Cloud Environments","meta_description":"Multi-cloud deployment lets organizations leverage multiple cloud providers to optimize performance, reliability, and cost-efficiency.","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":5,"seriesName":"Vector Database 101: Everything You Need to Know"},{"id":189,"title":"An Introduction to Vector Embeddings: What They Are and How to Use Them ","sub_title":null,"featured":null,"abstract":"In this blog post, we will understand the concept of vector embeddings and explore their applications, best practices, and tools for working with 
embeddings.\n","display_time":"2024-06-03","url":"everything-you-should-know-about-vector-embeddings","home_order":null,"published_at":"2024-06-04T21:18:17.494Z","created_by":82,"updated_by":21,"created_at":"2024-05-06T23:57:15.254Z","updated_at":"2025-07-01T00:00:28.777Z","show_in_learn":true,"order_in_learn":null,"category_order":null,"category_learn":null,"author":null,"canonical_rel":"https://zilliz.com/learn/everything-you-should-know-about-vector-embeddings","repost_to_medium":false,"repost_state":{"status":"failed"},"meta_title":"An Introduction to Vector Embeddings: What They Are and How to Use Them","meta_description":"In this blog post, we will understand the concept of vector embeddings and explore their applications, best practices, and tools for working with embeddings.\n","meta_keywords":null,"invisible":null,"locale":"en","repost_to_devto":null,"belong":"learn","read_time":9,"seriesName":"Vector Database 101: Everything You Need to Know"}],"canonicalUrl":null,"CONTENT_TYPE":"knowledge-article","recommendedArticles":[{"id":"learn-105","title":"Introduction to Unstructured Data","content":"\nWelcome to Vector Database 101. \n\n## Introduction\n\nThis is the first tutorial in the course _[Vector Database 101](https://zilliz.com/blog?tag=39\u0026page=1)_, and it will mostly be a text-based overview of _unstructured data_. I know, this doesn't sound like a very sexy topic, but before you press that little __x__ button on your browser tab, hear us out.\n\nNew data is being generated every day, and it is undoubtedly a key driver of both worldwide integration and the global economy. From heart rate monitors worn on wrists generating sensor data to GPS positions of a vehicle fleet to videos uploaded to social media, data is being generated at an exponentially increasing rate. 
The importance of this ever-increasing amount of data cannot be overstated; data can help companies better serve existing customers, identify supply chain weaknesses, pinpoint workforce inefficiencies, and identify and break into new markets, all factors that can enable a company (and you) to generate more revenue.\n\nNot convinced yet? International Data Corporation - also known as _IDC_ - predicts that the _global datasphere_ - a measure of the total amount of new data created and stored on persistent storage all around the world - will grow to 400 zettabytes (a zettabyte = $10^{21}$ bytes) by 2028. At that time, over 30% of said data will be generated in real-time, while 80% of all generated data will be _unstructured data_.\n\n\u003ciframe style=\"border-radius:12px\" src=\"https://open.spotify.com/embed/episode/7c4u0iiLVULmv7fkmhbghC?utm_source=generator\" width=\"100%\" height=\"152\" frameBorder=\"0\" allowfullscreen=\"\" allow=\"autoplay; clipboard-write; encrypted-media; fullscreen; picture-in-picture\" loading=\"lazy\"\u003e\u003c/iframe\u003e \n\n\n## Structured, semi-structured, and unstructured data definitions\n\nSo what exactly is [unstructured data](https://zilliz.com/glossary/unstructured-data)? As the name suggests, unstructured data refers to data that cannot be stored in a pre-defined format or fit into an existing data model. Human-generated data - images, video, audio, text files, etc - are great examples of unstructured data. But there are a variety of less mundane examples of unstructured data too. Protein structures, executable file hashes, and even human-readable code are three of a near-infinite set of examples of unstructured data.\n\nStructured data, on the other hand, refers to data that can be stored in a table-based format, while semi-structured data refers to data that can be stored in single- or multi-level array/key-value stores. If none of this makes sense to you yet, don't fret. 
Bear with us and we'll provide examples to help solidify your understanding of the key differences between structured and unstructured data.\n\n## Some concrete examples of structured data\n\nStill with us? Excellent - let's start by briefly describing structured/semi-structured data. In the simplest terms, traditional structured data can be stored via a relational model. Take, for example, a book database:\n\n| ISBN | Year | Name | Author |\n| ---------- | ---- | ------------------------------------ | ------------- |\n| 0767908171 | 2003 | A Short History of Nearly Everything | Bill Bryson |\n| 039516611X | 1962 | Silent Spring | Rachel Carson |\n| 0374332657 | 1998 | Holes | Louis Sachar |\n| ... | | | |\n\n\u003cp style=\"text-align: center\"\u003e\u003csub\u003eAhh, _Holes_. Brings back childhood memories.\u003c/sub\u003e\u003c/p\u003e\n\nIn the example above, each row within the database represents a particular book (indexed by ISBN), while the columns denote the corresponding category of information. Databases built on top of the relational model allow for multiple tables, each of which has its own unique set of columns. These tables are formally known as _relations_, but we'll just call them tables to avoid confusing databases with friends and family members. Two of the most popular and well-known examples of relational databases are _MySQL_ (released in 1995) and _PostgreSQL_ (released in 1996).\n\nSemi-structured data is the subset of structured data that does not conform to the traditional table-based model. Instead, semi-structured data usually comes with keys or markers which can be used to describe and index the data. 
Going back to the example of a book database, we can expand it to a semi-structured JSON format like so:\n\n```json\n[\n  {\n    \"ISBN\": \"0767908171\",\n    \"Month\": \"February\",\n    \"Year\": 2003,\n    \"Name\": \"A Short History of Nearly Everything\",\n    \"Author\": \"Bill Bryson\",\n    \"Tags\": [\"geology\", \"biology\", \"physics\"]\n  },\n  {\n    \"ISBN\": \"039516611X\",\n    \"Name\": \"Silent Spring\",\n    \"Author\": \"Rachel Carson\"\n  },\n  {\n    \"ISBN\": \"0374332657\",\n    \"Year\": 1998,\n    \"Name\": \"Holes\",\n    \"Author\": \"Louis Sachar\"\n  }\n]\n```\n\nNote how the first element in our JSON data now contains `Month` and `Tags` as two extra pieces of information, without impacting the two subsequent elements. With semi-structured data, this can be done without the extra overhead of two additional columns for all elements, thereby allowing for greater flexibility.\n\nSemi-structured data is typically stored in a _NoSQL database_ (wide-column store, object/document database, key-value store, etc), as its non-tabular nature prevents direct use in a relational database. _Cassandra_ (released in 2008), _MongoDB_ (released in 2009), and _Redis_ (released in 2009) are three of the most popular databases for semi-structured data today. Note how these popular databases for semi-structured data were released a little over a decade after popular databases for structured data - keep this in mind as we'll get to it later.\n\n## A paradigm shift: unstructured data definition\n\nNow that we have a solid understanding of structured/semi-structured data, let's move on to unstructured data. Unlike structured/semi-structured data, unstructured data can take any form, be of an arbitrarily large or small size on disk, and can require vastly different runtimes to transform and index. Let's take images as an example: three front-facing successive images of the same German Shepherd are _semantically the same_.\n\n_Semantically the same_? What on earth does that mean? Let's dive a bit deeper and unpack the idea of _semantic similarity_. 
Although these three photos may have vastly different pixel values, resolutions, file sizes, etc, all three photos are of the same German Shepherd in the same environment. Think about it - all three photos have identical or near-identical content but significantly different raw pixel values. This poses a new challenge for industries and companies that use data\u003csup\u003e1\u003c/sup\u003e: how can we transform, store, and search unstructured data in a similar fashion to structured/semi-structured data?\n\nAt this point, you're probably wondering: how can we search and analyze unstructured data if it has no fixed size or format? The answer: machine learning (or more specifically, deep learning). In the past decade, the combination of big data and deep neural networks has fundamentally changed the way we approach data-driven applications; tasks ranging from spam email detection to realistic text-to-video synthesis have seen incredible strides, with accuracy metrics on certain tasks reaching superhuman levels. This may sound scary (hello, Skynet), but we're still many decades away from Elon Musk's vision of AI taking over the world.\n\n\u003csup\u003e1\u003c/sup\u003e\u003csub\u003eIn essence, this is all industries, all companies, and all individuals. Including you!\u003c/sub\u003e\n\n### Examples of Unstructured data \nUnstructured data can be generated by machines or by humans. 
\nMachine-generated unstructured data examples include:\n\n- Sensor data: Data collected from sensors, such as temperature sensors, humidity sensors, GPS sensors, and motion sensors.\n- Machine log data: Data generated by machines, devices, or applications, including system logs, application logs, and event logs.\n- Internet of Things (IoT) data: Data collected from smart devices, such as smart thermostats, smart home assistants, and wearable devices.\n- Computer vision data: This is unstructured data generated by computer vision technologies, such as image recognition, object detection, and video analysis.\n- Natural Language Processing (NLP) data: This is data generated by NLP technologies, such as speech recognition, language translation, and sentiment analysis.\n- Web and application data: Data generated by web servers, web applications, and mobile applications, including user behavior data, error logs, and application performance data.\n\nExamples of human-generated unstructured data include:\n\n- Emails: Email messages are often unstructured and can contain free-form text, images, and attachments.\n- Text messages: Text messages can be informal, unstructured, and contain abbreviations or emojis.\n- Social media posts: Social media posts can vary in structure and content, including text, images, videos, and hashtags.\n- Audio recordings: Human-generated audio recordings, such as phone calls, voicemails, and audio notes, are unstructured data.\n- Handwritten notes: Handwritten notes can be unstructured and contain drawings, diagrams, and other visual elements.\n- Meeting notes: Meeting notes can contain unstructured text, diagrams, and action items.\n- Transcripts: Transcripts of speeches, interviews, and meetings can contain unstructured text with varying degrees of accuracy.\n- User-generated content: User-generated content on websites and forums can be unstructured data and include free-form text, images, and video files.\n\n## A crash course 
on embeddings\n\nLet's get back on track. The vast majority of neural network models are capable of turning a single piece of unstructured data into a list of floating point values, more commonly known as an _embedding_ or _embedding vector_. As it turns out, a properly trained neural network can output embeddings that represent the semantic content of the input data\u003csup\u003e2\u003c/sup\u003e. In a future tutorial, we'll go over a [vector database](https://zilliz.com/learn/what-is-vector-database) use case that uses a pre-determined algorithm to generate embeddings.\n\n\n\nThe photo above provides an example of transforming a piece of unstructured data into a vector. With the preeminent ResNet-50 convolutional neural network, this image can be represented as a vector of length 2048 - here are the first three and last three elements: `[0.1392, 0.3572, 0.1988, ..., 0.2888, 0.6611, 0.2909]`. Embeddings generated by a properly trained neural network have mathematical properties which make them easy to search and analyze. We won't go too much into detail here, but know that, generally speaking, embedding vectors for semantically similar objects are _close to each other in terms of distance_. Therefore, searching across and understanding unstructured data boils down to vector arithmetic.\n\n\n\nAs mentioned in the introduction, unstructured data will comprise a whopping 80% of all newly created data by the year 2028. This proportion is likely to keep growing as industries mature and adopt methods for unstructured data processing. This impacts everybody - you, me, the companies that we work for, the organizations that we volunteer for, and so on. Just as new user-facing applications from 2010 onward required databases for storing semi-structured data (as opposed to traditional tabular data), this decade necessitates databases purpose-built for indexing and searching across massive quantities (exabytes) of unstructured data.\n\nThe solution? 
A database for the AI era - a _vector database_. Welcome to our world; welcome to the world of ___[the open-source vector database, Milvus](https://zilliz.com/what-is-milvus)___.\n\n\u003csup\u003e2\u003c/sup\u003e\u003csub\u003eIn most tutorials, we'll focus on embeddings generated by neural networks; do note, however, that embeddings can be generated through handcrafted algorithms as well.\u003c/sub\u003e\n\n## Unstructured data processing\n\nExcited yet? Excellent. But before we dive headfirst into vector databases and Milvus, let's take a minute to talk about how we process and analyze unstructured data. In the case of structured and semi-structured data, searching for or filtering items in the database is fairly straightforward. As a simple example, querying MongoDB for the first book from a particular author can be done with the following code snippet (using `pymongo`):\n\n```python\n\u003e\u003e\u003e document = collection.find_one({'Author': 'Bill Bryson'})\n```\n\nThis type of querying methodology is not dissimilar to that of traditional relational databases, which rely on SQL statements to filter and fetch data. The concept is the same: databases for structured/semi-structured data perform filtering and querying using mathematical (e.g. `\u003c=`, string distance) or logical (e.g. `EQUALS`, `NOT`) operators across numerical values and/or strings. For traditional relational databases, this is called _relational algebra_; for those of you unfamiliar with it, trust me when I say it's much worse than linear algebra. 
You may have seen examples of extremely complex filters being constructed through relational algebra, but the core concept remains the same - traditional databases are _deterministic_ systems that always return exact matches for a given set of filters.\n\nUnlike databases for structured/semi-structured data, vector database queries are done by specifying an input _query vector_ as opposed to an SQL statement or data filters (such as `{'Author': 'Bill Bryson'}`). This vector is the embedding-based representation of the unstructured data. As a quick example, this can be done in Milvus with the following snippet (using `pymilvus`, whose `search` takes a list of query vectors as its first argument):\n\n```python\n\u003e\u003e\u003e results = collection.search([embedding], 'embedding', params, limit=10)\n```\n\nInternally, queries across large collections of unstructured data are performed using a suite of algorithms collectively known as _approximate nearest neighbor search_, or _ANN search_ for short. In a nutshell, ANN search is a form of optimization that attempts to find the \"closest\" point or set of points to a given query vector. Note the \"approximate\" in ANN. By utilizing clever indexing methods, vector databases expose a clear accuracy/performance tradeoff: allowing longer search runtimes yields results closer to those of a deterministic system, which always returns the exact nearest neighbors of a query vector. Conversely, reducing query times will improve throughput but may miss some of a query's true nearest neighbors. 
In this sense, unstructured data processing is a _probabilistic_ process\u003csup\u003e3\u003c/sup\u003e.\n\n\n\nANN search is a core component of vector databases and a massive research area in and of itself; as such, we'll dive deep into various ANN search methodologies available to you within Milvus in a future set of articles.\n\n\u003csup\u003e3\u003c/sup\u003e\u003csub\u003eVector databases can be made deterministic by selecting an exhaustive (brute-force) index, such as FLAT in Milvus.\u003c/sub\u003e\n\n## Wrapping up\n\nThanks for making it this far! Here are the key takeaways for this tutorial:\n\n- Structured/semi-structured data are limited to numeric, string, or time data types. Through the power of modern machine learning, unstructured data is represented as high-dimensional vectors of numerical values.\n- These vectors, more commonly known as embeddings, are great for representing the semantic content of the unstructured data. Structured/semi-structured data, on the other hand, is semantically as-is, i.e. the content itself is equivalent to the semantics.\n- Searching and analyzing unstructured data is done through ANN search, a process that is typically probabilistic. Querying across structured/semi-structured data, on the other hand, is deterministic.\n- Unstructured data processing is very different from structured/semi-structured data processing, and requires a complete paradigm shift. This naturally necessitates a new type of database - the vector database.\n\nThis concludes part one of this introductory series - for those of you new to vector databases, welcome to Milvus! 
In the next [tutorial](https://zilliz.com/learn/what-is-vector-database), we'll cover vector databases in more detail:\n\n- We'll first provide a bird's-eye view of the Milvus vector database.\n- We'll then follow it up with how Milvus differs from vector search libraries (FAISS, ScaNN, DiskANN, etc).\n- We'll also discuss how vector databases differ from vector search plugins (for traditional databases and search systems).\n- We'll wrap up with technical challenges associated with modern vector databases.\n\nSee you in the next tutorial, [What is a Vector Database?](https://zilliz.com/learn/what-is-vector-database).\n\n## Take another look at the Vector Database 101 courses\n\n1. [Introduction to Unstructured Data](https://zilliz.com/learn/introduction-to-unstructured-data)\n2. [What is a Vector Database?](https://zilliz.com/learn/what-is-vector-database)\n3. [Comparing Vector Databases, Vector Search Libraries, and Vector Search Plugins](https://zilliz.com/learn/comparing-vector-database-vector-search-library-and-vector-search-plugin)\n4. [Introduction to Milvus](https://zilliz.com/learn/introduction-to-milvus-vector-database)\n5. [Milvus Quickstart](https://zilliz.com/learn/milvus-vector-database-quickstart)\n6. [Introduction to Vector Similarity Search](https://zilliz.com/learn/vector-similarity-search)\n7. [Vector Index Basics and the Inverted File Index](https://zilliz.com/learn/vector-index)\n8. [Scalar Quantization and Product Quantization](https://zilliz.com/learn/scalar-quantization-and-product-quantization)\n9. [Hierarchical Navigable Small Worlds (HNSW)](https://zilliz.com/learn/hierarchical-navigable-small-worlds-HNSW)\n10. [Approximate Nearest Neighbors Oh Yeah (ANNOY)](https://zilliz.com/learn/approximate-nearest-neighbor-oh-yeah-ANNOY)\n11. [Choosing the Right Vector Index for Your Project](https://zilliz.com/learn/choosing-right-vector-index-for-your-project)\n12. 
[DiskANN and the Vamana Algorithm](https://zilliz.com/learn/DiskANN-and-the-Vamana-Algorithm) ","language":null,"keywords":null,"published_at":"2022-09-30T06:15:45.722Z","created_by":{"id":18,"firstname":"admin","lastname":"admin","username":null,"email":"admin@zilliz.com","password":"$2a$10$OS8coZJRymO4g1dl88yqU.qIxzaqiEyWxa3j4Ab/HuKyOIV7dhTK6","resetPasswordToken":null,"registrationToken":null,"isActive":true,"blocked":null,"preferedLanguage":null},"updated_by":{"id":60,"firstname":"Di","lastname":"Feng","username":null,"email":"fendy.feng@zilliz.com","password":"$2a$10$3n0EPwpsTTiNpylnqJ4MReO3yAuO3glfers.zS0Wo4pmwHzaFMbnm","resetPasswordToken":null,"registrationToken":null,"isActive":true,"blocked":null,"preferedLanguage":null},"created_at":"2022-09-30T06:15:18.726Z","updated_at":"2025-03-24T09:01:42.846Z","featured":true,"abstract":"Buckle up for the first tutorial in our Vector Database 101 series and untangle the intricacy around Milvus with us every week. ","sub_title":"Everything about unstructured data - what they are, how they differ from traditional data types, and the paradigm shift called for by the need to efficiently process them.","author":"Zilliz","display_time":"Sep 29, 2022","home_order":"a","isNews":null,"url":"introduction-to-unstructured-data","show_in_learn":false,"order_in_learn":null,"category_order":null,"category_learn":{"id":3,"name":"Vector Database 101","created_by":23,"updated_by":23,"created_at":"2021-12-22T07:11:01.954Z","updated_at":"2021-12-22T07:11:19.627Z","order":"0","published_at":"2021-12-22T07:11:19.621Z","locale":"en"},"deploy_time":null,"author_info":null,"canonical_rel":"https://zilliz.com/learn/introduction-to-unstructured-data","repost_to_medium":true,"repost_state":{"status":"success","mediumId":"68e4b3354d73","mediumUrl":"https://medium.com/@zilliz_learn/introduction-to-unstructured-data-68e4b3354d73"},"meta_title":"Introduction to Unstructured Data","meta_description":"Buckle up for the first tutorial in our Vector 
# Choosing the Right Vector Index for Your Project

## A quick recap

In our [Vector Database 101 series](https://zilliz.com/blog?tag=39&page=1), we've learned that [vector databases](https://zilliz.com/learn/what-is-vector-database) are purpose-built databases meant to conduct [approximate nearest neighbor search](https://zilliz.com/glossary/anns) across large datasets of high-dimensional vectors (typically over 96 dimensions and sometimes over 10k). These vectors are meant to represent the semantics of [unstructured data](https://zilliz.com/glossary/unstructured-data), i.e., data that doesn't fit neatly into traditional databases such as relational databases, wide-column stores, or document databases.

Conducting an efficient [similarity search](https://zilliz.com/learn/vector-similarity-search) requires a data structure known as a vector index. These indexes make it possible to search the database efficiently rather than performing a brute-force comparison against every vector. There are a number of in-memory vector search algorithms and indexing strategies available to you on your vector search journey. Here's a quick summary of each:

### Brute-force search (`FLAT`)

Brute-force search, also known as "flat" indexing, is an approach that compares the query vector with every vector in the database. While it may seem naive and inefficient, flat indexing can yield surprisingly good results for small datasets, especially when parallelized with accelerators like GPUs or FPGAs.

### Inverted file index (`IVF`)

[IVF](https://zilliz.com/learn/vector-index) is a partition-based indexing strategy that assigns each database vector to the partition with the closest centroid. Cluster centroids are determined using unsupervised clustering (typically k-means). With the centroids and assignments in place, we can create an [inverted index](https://zilliz.com/glossary/inverted-index), correlating each centroid with a list of the vectors in its cluster. IVF is generally a solid choice for small- to medium-size datasets.

### Scalar quantization (`SQ`)

[Scalar quantization](https://zilliz.com/learn/scalar-quantization-and-product-quantization) converts floating-point vectors (typically `float32` or `float64`) into integer vectors by dividing each dimension into bins.
The process involves:

- Determining each dimension's maximum and minimum values.
- Calculating start values and step sizes.
- Performing quantization by subtracting start values and dividing by step sizes.

The quantized dataset typically uses 8-bit unsigned integers, but lower bit widths (5-bit, 4-bit, and even 2-bit) are also common.

### Product quantization (`PQ`)

Scalar quantization disregards the distribution of values along each vector dimension, potentially leading to underutilized bins. [Product quantization](https://zilliz.com/learn/scalar-quantization-and-product-quantization) (PQ) is a more powerful alternative that performs both compression and reduction: high-dimensional vectors are mapped to low-dimensional quantized vectors by assigning fixed-length chunks of the original vector to a single quantized value. `PQ` typically involves splitting each vector into subvectors, running k-means separately on each subvector position across the dataset, and replacing each subvector with the index of its nearest centroid.

### Hierarchical Navigable Small Worlds (`HNSW`)

[HNSW](https://zilliz.com/learn/hierarchical-navigable-small-worlds-HNSW) is the most commonly used vector indexing strategy today. It combines two concepts: skip lists and Navigable Small Worlds (NSWs). Skip lists are effectively layered linked lists for faster random access (`O(log n)` for skip lists vs. `O(n)` for linked lists). In HNSW, we create a hierarchical graph of NSWs. Searching in HNSW involves starting at the top layer and moving toward the nearest neighbor in each layer until we find the closest match. Inserts work by finding the nearest neighbors of the new vector and adding connections to them.

### Approximate Nearest Neighbors Oh Yeah (`Annoy`)

[Annoy](https://zilliz.com/learn/approximate-nearest-neighbor-oh-yeah-ANNOY) is a tree-based index that uses binary search trees as its core data structure.
It partitions the vector space recursively to create a binary tree, where each node is split by a hyperplane equidistant from two vectors randomly sampled from that node's subset. The splitting process continues until leaf nodes have fewer than a predefined number of elements. Querying involves iteratively traversing the tree, determining at each node which side of the hyperplane the query vector falls on.

Don't worry if some of these summaries feel a bit dense. Vector search algorithms can be fairly complex but are often easier to explain with visualizations and a bit of code.

## Picking a vector index

So, how exactly do we choose the right vector index? This is a fairly open-ended question, but one key principle to remember is that the right index will depend on your application requirements. For example: are you primarily interested in query speed (with a static database), or will your application require a lot of inserts and deletes? Do you have any constraints on your machine type, such as limited memory or CPU? Or perhaps the domain of data that you'll be inserting will change over time? All of these factors affect which index type is best for your application.

Here are some guidelines to help you choose the right index type for your project:

**100% recall**: This one is fairly simple: use `FLAT` search if you need 100% accuracy. All efficient data structures for vector search perform _approximate_ nearest neighbor search, meaning that there's going to be a loss of recall once the index size hits a certain threshold.

**`index_size` < 10MB**: If your total index size is tiny (fewer than 5k 512-dimensional `float32` vectors), just use `FLAT` search.
The overhead associated with index building, maintenance, and querying is simply not worth it for a tiny dataset.

**10MB < `index_size` < 2GB**: If your total index size is small (fewer than 1M 512-dimensional `float32` vectors), my recommendation is to go with a standard inverted-file index (e.g. `IVF`). An inverted-file index can reduce the search scope by around an order of magnitude while still maintaining fairly high recall.

**2GB < `index_size` < 20GB**: Once you reach a mid-size index (fewer than 10M 512-dimensional `float32` vectors), you'll want to start considering `PQ` and `HNSW` index types. Both will give you reasonable query speed and throughput, but `PQ` allows you to use significantly less memory at the expense of lower recall, while `HNSW` often gives you 95%+ recall at the expense of high memory usage: around 1.5x the total size of your index. For dataset sizes in this range, composite `IVF` indexes (`IVF_SQ`, `IVF_PQ`) can also work well, but I would use them only if you have limited compute resources.

**20GB < `index_size` < 200GB**: For large datasets (fewer than 100M 512-dimensional `float32` vectors), I recommend the use of _composite indexes_: `IVF_PQ` for memory-constrained applications and `HNSW_SQ` for applications that require high recall. A composite index combines multiple vector search strategies into a single index, effectively getting the best of both; `HNSW_SQ`, for example, retains most of `HNSW`'s base query speed and throughput but with a significantly reduced index size.
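
The size thresholds above boil down to simple arithmetic: index size is roughly the number of vectors times the dimensionality times 4 bytes for `float32`. The sketch below turns the guidelines into a hypothetical `suggest_index` helper; the function names and the `memory_constrained` flag are ours, not part of Milvus or any other library, and the returned strings are just index-type labels. It assumes decimal MB/GB and raw `float32` vectors, and it treats the boundaries as hard cutoffs even though the text presents them as rough guides. Above 200GB, which the guidelines don't cover, it falls back to a `DISK_BASED` label as an assumption.

```python
# Hypothetical helper: encodes the size-based rules of thumb from this post.
# The returned strings are index-type labels, not calls into Milvus or FAISS.

MB = 10**6  # decimal units; the thresholds in the text are approximate
GB = 10**9


def index_size_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw vector size: num_vectors * dim * bytes_per_value (4 bytes for float32)."""
    return num_vectors * dim * bytes_per_value


def suggest_index(num_vectors: int, dim: int, *,
                  need_full_recall: bool = False,
                  memory_constrained: bool = False) -> str:
    """Return a suggested index type for an in-memory vector search workload."""
    if need_full_recall:
        # Only brute-force search guarantees 100% recall.
        return "FLAT"
    size = index_size_bytes(num_vectors, dim)
    if size < 10 * MB:
        return "FLAT"  # tiny: index overhead is not worth it
    if size < 2 * GB:
        return "IVF"  # small: partitioning shrinks the search scope
    if size < 20 * GB:
        # mid-size: PQ saves memory at the cost of recall; HNSW favors recall
        return "PQ" if memory_constrained else "HNSW"
    if size < 200 * GB:
        # large: composite indexes
        return "IVF_PQ" if memory_constrained else "HNSW_SQ"
    # Assumption: the guidelines stop at 200 GB, so fall back to a disk-based label.
    return "DISK_BASED"


if __name__ == "__main__":
    for n in (1_000, 500_000, 5_000_000, 50_000_000):
        print(n, suggest_index(n, 512), suggest_index(n, 512, memory_constrained=True))
```

For 512-dimensional vectors, this yields `FLAT` at 1k vectors, `IVF` at 500k, `HNSW` (or `PQ` when memory-constrained) at 5M, and `HNSW_SQ` (or `IVF_PQ`) at 50M. In practice you would also weigh insert/delete patterns and hardware limits, which a size-only rule like this ignores.
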
We won't dive too deep into composite indexes here, but [FAISS's documentation](https://github.com/facebookresearch/faiss/wiki/Faiss-indexes-(composite)) provides a great overview for those interested.

One last note on Annoy: we don't recommend using it, since it fills a similar niche to HNSW but is generally less performant. Annoy is the most uniquely named index, though, so it gets bonus points there.

## A word on disk indexes

Another option we haven't explicitly covered in this post is disk-based indexes. In a nutshell, disk-based indexes leverage the architecture of NVMe disks by colocating individual search subspaces into their own NVMe page. Combined with the negligible seek latency of NVMe SSDs, this enables efficient storage of both graph- and tree-based vector indexes.

These index types are becoming increasingly popular since they enable the storage and search of billions of vectors on a single machine while maintaining a reasonable performance level. The downside to disk-based indexes should be obvious as well. Because disk reads are significantly slower than RAM reads, disk-based indexes often experience increased query latencies, sometimes by over 10x! If you are willing to sacrifice latency and throughput for the ability to store billions of vectors at minimal cost, disk-based indexes are the way to go. Conversely, if your application requires high performance (often at the expense of increased compute costs), you'll want to stick with `IVF_PQ` or `HNSW_SQ`.

## Wrapping up

In this post, we covered some of the vector indexing strategies available. Given your data size and compute limitations, we provided a simple flowchart to help determine the optimal strategy. Please note that this flowchart is a general guideline, not a hard-and-fast rule.
Ultimately, you'll need to understand the strengths and weaknesses of each indexing option, as well as whether a composite index can help you squeeze out the last bit of performance your application needs. All these index types are freely available in Milvus, so go out there and experiment!

*By Frank Liu, Director of Operations & ML Architect at Zilliz. Published Jul 17, 2023; updated Oct 11, 2024. Canonical version: https://thesequence.substack.com/p/guest-post-choosing-the-right-vector*

# Integrating Vector Databases with Cloud Computing: A Strategic Solution to Modern Data Challenges

Cloud computing has been a rising trend over the past ten years. It transforms data management and analytics by offering greater scalability, flexibility, and accessibility than on-premises infrastructure.
Cloud computing allows businesses to scale resources on demand, reducing costs and enabling real-time decision-making. Vector databases are a cutting-edge technology for efficiently extracting insights from unstructured data like images, text, and video through high-dimensional numerical representations known as [vector embeddings](https://zilliz.com/glossary/vector-embeddings).

Integrating vector databases and cloud computing creates a powerful infrastructure that significantly enhances the management and analysis of large-scale, complex data, especially in AI and machine learning. This combination leverages both technologies' strengths to provide a comprehensive solution for modern data challenges.

## The Essence of Vector Databases

A [vector database](https://zilliz.com/learn/what-is-vector-database) is designed to store, index, and retrieve multi-dimensional data points, commonly called vectors. Unlike traditional relational databases, which handle data (like numbers and strings) organized in tables, vector databases are specifically designed to manage unstructured data represented in a multi-dimensional vector space. This design makes them highly suitable for AI and machine learning applications, where data often takes the form of vectors such as image embeddings, text embeddings, or other feature vectors. For this reason, vector databases are sometimes called [AI databases](https://zilliz.com/glossary/ai-database).

Vector databases excel at performing similarity searches through indexing and search algorithms, swiftly identifying similar vectors within a large dataset.
This capability is essential for AI-native applications like [Retrieval Augmented Generation](https://zilliz.com/learn/Retrieval-Augmented-Generation) (RAG), [recommendation systems](https://zilliz.com/vector-database-use-cases/recommender-system), and [natural language processing](https://zilliz.com/learn/A-Beginner-Guide-to-Natural-Language-Processing) (NLP), where processing high-dimensional data is crucial.

Overall, vector databases represent a significant evolution in database technology. They offer specialized solutions for handling the complex, vector-based data prevalent in modern AI and machine learning applications.

## Cloud Computing Fundamentals

Cloud computing is a technology that allows users to access and use computing resources (like servers, storage, databases, networking, software, and more) over the internet, often referred to as "the cloud." Instead of owning and maintaining physical [hardware](https://zilliz.com/glossary/ai-hardware) and software, users can rent access to these resources from a cloud service provider. This model provides several benefits:

- **Scalability**: It allows individual developers and businesses to adjust their computing resources based on demand. This flexibility helps balance performance and cost, as organizations can scale up or down, in or out, as needed without investing in expensive hardware infrastructure.
- **On-demand resources**: Cloud platforms provide access to computing resources over the Internet. Major cloud providers, such as Google Cloud, AWS, and Microsoft Azure, offer various services to cater to different business needs.
- **Accessibility**: Cloud computing enables global accessibility, with data centers located around the world to provide low-latency access to users wherever they are.
- **Maintenance and management**: The cloud provider is responsible for maintaining, updating, and managing the hardware and software, reducing users' IT workload.
- **Reliability**: Many cloud providers offer reliable backup and disaster recovery services, helping ensure data integrity and availability.

## Synergy Between Vector Databases and Cloud Computing

The synergy between vector databases and cloud computing provides scalable, efficient, and cost-effective solutions for handling complex data analytics and AI-driven applications.

Cloud computing's scalability and elasticity are well suited to the demands of vector databases, which often need to handle fluctuating workloads and rapidly expanding data volumes. The cloud's ability to dynamically allocate resources lets vector databases scale efficiently, meeting the demands of high-dimensional data processing without substantial upfront investment in physical infrastructure. Moreover, the cloud provides robust infrastructure that enhances data processing performance and speed, a critical requirement for vector databases that deal with complex queries and extensive datasets.

Integrating vector databases with cloud computing also improves cost efficiency. The cloud's pay-as-you-go pricing model aligns well with the resource-intensive nature of vector databases, offering a cost-effective way to manage large datasets and complex computations. Integration with cloud services further empowers vector databases by providing access to a range of complementary tools and platforms, including machine learning algorithms, analytics services, and data visualization tools.
Finally, the reliability and security offered by cloud platforms are important for hosting vector databases, especially when dealing with sensitive or critical information. The cloud's security features, data encryption, and compliance with regulatory standards help protect data against threats and breaches while maintaining high availability and trust.

## Examples of Cloud-Based Vector Database Services

Cloud-based vector database services are designed to handle complex, multi-dimensional data, supporting AI and machine learning applications. Here are some examples of these services:

- [**Zilliz Cloud**](https://zilliz.com/cloud) is a fully managed vector database service designed for speed, scale, and high performance in enterprise-grade AI applications. It's built on top of the open-source [Milvus](https://zilliz.com/what-is-milvus) vector database but offers additional features and optimizations.
- [**Pinecone**](https://zilliz.com/comparison/pinecone-vs-zilliz-vs-milvus) is a specialized vector database service that focuses on similarity search, enabling users to build, deploy, and scale vector search applications quickly.
- [**Weaviate**](https://zilliz.com/comparison/milvus-vs-weaviate) is an open-source vector database that helps developers create intuitive and reliable AI-powered applications. It offers a self-hosted open-source option and a cloud-based service called [Weaviate Cloud Services](https://zilliz.com/learn/Weaviate-cloud-vs-zilliz) (WCS).
- [**Qdrant Cloud**](https://zilliz.com/learn/qdrant-cloud-vs-zilliz) is the cloud service version of Qdrant, an open-source vector search engine.
It offers managed vector database services that leverage cloud infrastructure for enhanced scalability and performance.

These services highlight the growing trend of integrating vector database capabilities with cloud platforms, providing scalable, flexible, and efficient solutions for managing and analyzing complex data in various AI and machine learning applications.

## Real-world Applications and Use Cases

1. An **e-commerce platform** can store customer profiles and product embeddings in a cloud-based vector database. Machine learning models trained on this data can generate real-time personalized product recommendations, increasing user engagement and sales conversion rates.
2. A **healthcare provider** can leverage a cloud-hosted vector database to store patient health records and medical images represented as feature vectors. Physicians can use similarity search algorithms to identify patients with similar medical conditions or imaging patterns. This enhances diagnostic accuracy, facilitates knowledge sharing among healthcare professionals, and improves patient outcomes.
3. A **financial institution** can deploy machine learning models on a cloud platform to analyze transaction data and detect fraudulent activities. The vector database enables efficient storage and retrieval of high-dimensional feature vectors, enhancing the accuracy and speed of fraud detection algorithms.
4. A **social media platform** can store user profiles, social connections, and content embeddings in cloud-based vector databases. Machine learning algorithms analyze this data to recommend relevant posts, videos, and advertisements to users. Cloud infrastructure supports the high-throughput, low-latency requirements of real-time content recommendation systems.

## Future Trends and Directions

Emerging trends in vector databases and cloud computing point toward further technological advances and new applications.

- Future vector databases will likely incorporate more sophisticated query optimization techniques to enhance efficiency.
- Integrating a vector database with hot and cold storage systems can significantly enhance data management and retrieval, especially when large volumes of data need to be processed efficiently.
- Vector databases may evolve to support a broader range of complex data types.
- Integration between vector and graph databases can unlock new possibilities for analyzing relationships and patterns in interconnected datasets.
- Cloud services are moving toward edge computing, which can improve the performance of real-time applications that rely on vector databases by reducing latency and improving responsiveness.
- Future developments in cloud security can enhance the confidentiality, integrity, and availability of data stored in vector databases.

## Conclusion

Integrating vector databases and cloud computing creates a powerful synergy for data management, analytics, and AI-driven applications. It allows organizations to leverage scalable infrastructure, advanced analytics tools, and managed database services to efficiently extract insights from complex, high-dimensional datasets.

Given their substantial potential impact across diverse sectors, including e-commerce, healthcare, finance, social media, manufacturing, and gaming, these technologies are worth exploring further.
Staying informed about emerging trends and advancements in these technologies is essential for remaining competitive and seizing opportunities in the digital economy.

Integrating Vector Databases with Cloud Computing: A Strategic Solution to Modern Data Challenges. By Fahad, Freelance Technical Writer. Published Mar 22, 2024; updated Aug 11, 2024.

</script></body></html>